I know what it’s like to be excited about a new AI tool. You type in a prompt, hit enter, and wait for the magic to happen. But then, it does happen—and it’s a bit of a letdown. You’re asking for an image of a “doctor,” and every single result is a man.

You’re writing a marketing campaign, and the AI’s language for women feels… well, a little like something out of a 1950s sitcom. That’s been my experience, and it’s led me to a real understanding of the gen AI gender gap.

This issue is more than just a little quirk in the technology. It’s a frustrating reminder that when the people building generative AI lack diversity, the tools they create can be unfair, biased, or simply leave people out.

For me, this impacts my work every single day. I have to spend extra time editing, prompting, and correcting because the AI I’m using isn’t built to understand a world that looks like mine.

In this blog post, I want to share my journey in grappling with this issue. We’ll talk about what the gen AI gender gap really means, look at some of the hard evidence that confirms it, and then discuss what we can all do, both as creators and as users, to push for more inclusive, smarter AI.

My Experience with the Gen AI Gender Gap

In the simplest terms, the gen AI gender gap refers to the huge imbalance we see between men and women, as well as other underrepresented groups, in how generative AI systems are developed, trained, and used. I’ve personally seen this gap show up in two very distinct ways, and they’re both directly tied to the content I create.

First, there’s the lack of diversity among creators. When I look at the teams behind many of the most popular AI models, the reality is that the majority of developers, researchers, and engineers are men. I’ve been to tech conferences where panel after panel on the future of AI was almost exclusively male. This means that women and marginalized groups aren’t fully represented during the critical design and training phases.

This is why when I ask an AI to generate images for a blog post, I often have to specify “a female CEO” or “a diverse group of engineers.” If I don’t, the default is almost always a group of men. That bias is the direct result of the lack of diverse perspectives in the room when the model was being built. It’s like building a house with a blueprint drawn by only one person—it’s going to have a very specific, limited perspective.

Second, there’s the bias in AI outputs. This one really hits home for me as a content creator. Generative AI is trained on enormous datasets scraped from the internet, and let’s be honest, the internet is full of stereotypes.

When an AI learns from billions of articles, books, and social media posts, it absorbs all of that ingrained bias. For instance, it might automatically associate specific roles with a particular gender (a man as a lawyer, a woman as a teacher) simply because those are the patterns that appeared most frequently in its training data.
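To make that concrete, here is a minimal sketch of how frequency alone can produce a gendered association. It is not how any real model is trained; the five-sentence corpus, the word lists, and the function names are all made up for illustration, and real systems learn far subtler statistics from far more text.

```python
import re
from collections import Counter, defaultdict

# Toy corpus, invented for illustration only.
CORPUS = [
    "The lawyer said he would file the appeal.",
    "The lawyer argued his case well.",
    "The teacher said she would grade the essays.",
    "The teacher asked her class to read.",
    "The lawyer said she was ready.",
]

# Small, hypothetical word lists; a real audit would need broader ones.
MALE = {"he", "him", "his"}
FEMALE = {"she", "her", "hers"}
ROLES = {"lawyer", "teacher"}


def role_gender_counts(sentences):
    """Count how often each role word shares a sentence with gendered pronouns."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = set(re.findall(r"[a-z]+", sentence.lower()))
        for role in ROLES & words:
            if words & MALE:
                counts[role]["male"] += 1
            if words & FEMALE:
                counts[role]["female"] += 1
    return counts


def most_likely_gender(counts, role):
    """Return the label seen most often with the role (a naive 'prediction')."""
    return counts[role].most_common(1)[0][0]


counts = role_gender_counts(CORPUS)
for role in sorted(ROLES):
    print(role, dict(counts[role]), "->", most_likely_gender(counts, role))
```

In this toy data, "lawyer" appears with male pronouns twice and female pronouns once, so the naive guess for a lawyer is male, even though nothing in the code mentions a stereotype. The skew comes entirely from what was counted.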

I’ve had to manually correct headlines, rephrase content, and even discard entire sections of AI-generated text because it felt like a step backward in terms of gender equality. It’s frustrating and time-consuming, and it proves that we’re not just dealing with an abstract problem; we’re dealing with a tangible impact on the technology we use to build our digital world.

To me, the gen AI gender gap means that if we don’t bring more diverse voices to the table and actively clean up our biased data, we’re building a future where technology reinforces old prejudices instead of breaking them down.

What the Data Says About the Gen AI Gender Gap

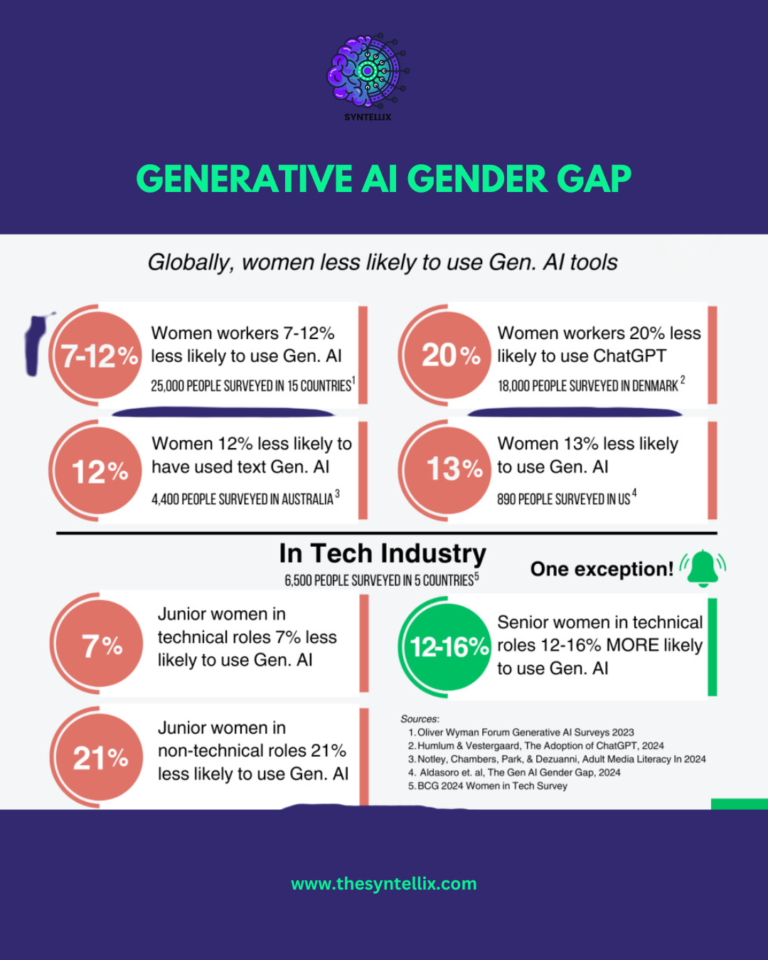

It’s one thing to have a personal feeling about this issue, but my own experience has been backed up by some powerful research. I was particularly struck by a study from Harvard Business School that found a consistent gender gap in how people are adopting generative AI.

They examined data from 18 different studies, covering more than 140,000 users from all over the world. What they found was something I had suspected: women were about 20% less likely than men to use tools like ChatGPT and Claude. This gap showed up across different regions, jobs, and education levels.

This finding was a real wake-up call for me. It’s not just a matter of availability—the study showed the gap persisted even when men and women had equal access to the tools.

It suggests that the problem is more complex. It’s about whether the tool feels relevant and useful to you. Could it be that women are using these tools less because the results are less accurate or beneficial for their specific needs? I think so. If I’m constantly having to correct biased outputs, I’m going to be less inclined to use the tool in the first place.

The study made a compelling argument: to close this gap, we need to go beyond simply providing access. We need to boost confidence, offer tailored training, and actively work to change cultural attitudes around AI. This is a powerful truth that I’ve seen play out in my own life. If the technology feels like it was designed without my perspective in mind, I’m naturally going to be less engaged with it. This Harvard data gave me the authority to speak about my own frustrations, knowing they are part of a much larger, globally documented trend.

Why the Gen AI Gender Gap Exists

Digging deeper, I’ve realized that the gen AI gender gap is not one single problem but a complex mix of systemic issues. It all boils down to two key factors: a lack of diversity in the people who build AI and the biased data they use to train it.

Let’s start with the data. The models learn from the collective human knowledge found online—websites, books, articles, and social media. The problem is, this digital library isn’t a neutral representation of humanity.

It’s a mirror of our society, with all of its existing biases, including gender stereotypes. Much of the content we’ve created over decades has been written from a male perspective or has reinforced traditional gender roles. It’s no surprise, then, that an AI trained on this data will pick up on those patterns. The AI isn’t inherently biased; it’s simply reflecting the bias it sees in the world. I’ve seen it myself in the subtle ways AI will refer to a woman as “the mother” or “the wife” even when those roles aren’t central to the story. It’s a small detail, but it speaks volumes about the data it’s learned from.

Then there’s the issue of the tech industry itself. While we’ve made progress, women and non-binary individuals are still heavily underrepresented in AI research and development roles.

The teams making the most crucial decisions about AI’s purpose, functionality, and ethical guidelines often lack diverse perspectives. When a team is not reflective of the population it serves, it can unintentionally overlook biases, create blind spots, or fail to prioritize fairness in the AI’s design.

To learn more about AI bias, read my guide: The AI Bias Handbook

I’ve seen this happen when an AI tool is great at understanding one type of English accent but struggles with others, or when a facial recognition tool has a hard time identifying people with darker skin tones. These are not just technical failures; they’re failures of diversity.

In my view, the gen AI gender gap is a powerful feedback loop. The industry lacks diversity, so it creates AI with a limited perspective. That AI, in turn, reflects the biases of its training data, which then creates a product that is less useful for a diverse audience. The result? The gender gap persists, and we all lose out.

Why Diversity Matters for the Future of AI

When I first started to see this problem, I thought it was just a minor inconvenience for me as a content creator. But the more I’ve learned, the more I’ve realized that closing the gen AI gender gap is essential for all of us.

When AI is built by homogeneous teams and trained on biased data, the content it generates will feel narrow, maybe even stereotypical, and it will lack the nuance that makes great content so powerful. For me, it means more time spent correcting its work and less time on the creative process. It means I have to be the one to inject the diversity and perspective that the AI is missing.

But when we actively build diverse teams and use inclusive data, the AI becomes a much more balanced and capable tool. It understands a wider range of viewpoints and can generate content that is more respectful and representative of a global audience.

I’ve personally used AI models that are built with more diverse data, and the difference is incredible. The language is more inclusive, the imagery is more varied, and the overall output feels more authentic and human. The AI becomes a partner in my creative process, not just a tool that needs constant supervision.

Ultimately, diversity makes generative AI more human-like, not just in how it writes or speaks, but in its ability to treat people fairly and with wisdom. It’s a win-win: it’s the right thing to do from an ethical standpoint, and it creates a better, more powerful, and more profitable technology for all.

Initiatives Working to Close the Gen AI Gender Gap

Despite the challenges, I’m optimistic because I’ve seen a growing number of initiatives dedicated to fixing this problem.

1. Educational Programs: This is where the work really begins. Organizations like AI4ALL and Girls Who Code are doing a fantastic job of providing AI education and mentorship to young people from underrepresented backgrounds. I’ve followed their work and seen the next generation of diverse AI professionals being inspired and empowered. They’re tackling the problem at the source by building a more inclusive talent pipeline.

2. Corporate Initiatives: It’s encouraging to see major companies stepping up. I’m aware of programs at Google and Microsoft that are specifically designed to support women in AI. Google’s “Women in AI” initiative, for instance, focuses on increasing female representation in research and development. These programs offer mentorship, funding, and a professional community, all of which are critical for attracting and retaining diverse talent.

3. Advocacy Groups: The grassroots movement is strong. Groups like Women in Machine Learning (WiML) and Black in AI provide essential networking opportunities, resources, and support for underrepresented groups. I’ve seen how these communities offer a place to share knowledge, find collaborators, and advocate for change from within the industry. They’re a powerful force for accountability and progress.

4. Policy Changes: I believe that for real systemic change, we need to see policy shifts. Governments and organizations are starting to push for more inclusive hiring practices to ensure that AI teams actually reflect the diversity of the populations they serve. This kind of top-down pressure, combined with grassroots advocacy, is what will ultimately close the gen AI gender gap for good.

My Tips for Creating Inclusive AI Systems

For me, this isn’t just about waiting for the industry to change; it’s about what I can do on my own. If you’re a creator, developer, or just a user of generative AI, here are some actionable tips I’ve learned from my own journey to help promote diversity and inclusivity.

1. Actively Seek Diverse Teams: My number one tip? Don’t settle for a homogeneous team. If you’re building a new project or an AI tool, make sure you have people of different genders, ethnicities, and backgrounds on your team. It’s not just a matter of fairness; it’s a necessity for creating AI that can anticipate a wider range of user needs and biases.

2. Conduct Bias Audits (and Be Honest About Them!): This is so important. You have to regularly test your AI systems for bias and fairness. Use diverse datasets and make sure your testing teams are also diverse. Don’t be afraid to find problems; the goal is to fix them.

3. Use Inclusive Data: This is a big one. Make sure your training datasets are representative. I’ve seen firsthand how a small, unrepresentative dataset can lead to a lot of headaches down the road. If you’re building a language model, make sure it’s trained on content from a variety of voices and cultures.

4. Develop Ethical Guidelines: Every AI project I work on now starts with a clear set of ethical guidelines. I believe in creating and following ethical AI frameworks that prioritize inclusion and fairness. Make these guidelines a core part of your development process from day one.

5. Be a Mentor and a Supporter: If you have an opportunity, offer mentorship and resources to underrepresented groups in AI. I believe we all have a role to play in encouraging women and minorities to pursue careers in this field and providing the support they need to succeed.
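
For the bias audit tip above, here is a rough sketch of what a very first check might look like: send gender-neutral prompts to a model several times and tally the gendered words that come back. Everything here is an assumption for illustration. `audit_gender_skew` and `fake_generate` are hypothetical names, the word lists are tiny, and `fake_generate` is a deterministic stub standing in for whatever model you actually call. A real audit would need many more prompts, samples, and measures than pronoun counts.

```python
import re
from collections import Counter

# Small, hypothetical word lists; extend them for real use.
MALE = {"he", "him", "his", "man", "men"}
FEMALE = {"she", "her", "hers", "woman", "women"}


def audit_gender_skew(generate, prompts, samples_per_prompt=4):
    """Tally gendered words in the outputs for each gender-neutral prompt.

    `generate` is any callable (prompt, sample_index) -> text. It is a
    hypothetical stand-in for a real model call.
    """
    report = {}
    for prompt in prompts:
        tally = Counter()
        for i in range(samples_per_prompt):
            words = re.findall(r"[a-z]+", generate(prompt, i).lower())
            tally["male"] += sum(w in MALE for w in words)
            tally["female"] += sum(w in FEMALE for w in words)
        total = tally["male"] + tally["female"]
        # Share of gendered words that are male; None if none appeared.
        report[prompt] = tally["male"] / total if total else None
    return report


def fake_generate(prompt, i):
    """Deterministic stub that imitates a skewed model (made up for this demo)."""
    if "doctor" in prompt:
        return "He checked his notes." if i < 3 else "She checked her notes."
    return "They reviewed the plan."


report = audit_gender_skew(fake_generate, ["Describe a doctor.", "Describe a team."])
for prompt, male_share in report.items():
    print(prompt, male_share)
```

With the stub, the doctor prompt comes back with a male share of 0.75, and the team prompt returns `None` because no gendered words appear. A share far from 0.5 on a neutral prompt is a signal to dig deeper, not a verdict on its own.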

The Future of Diversity in Generative AI

The gen AI gender gap is a difficult, complex issue, but I’m convinced it’s one we can fix. I see signs of hope every day: more women are entering tech fields, companies are starting to talk about diversity in a meaningful way, and advocacy groups are making sure the conversation never stops.

There’s still a long way to go, though. Closing the gen AI gender gap will require a sustained effort, a commitment to collaboration, and a willingness from all of us to challenge the status quo.

The future of AI depends on the voices we include today. When we create technology that truly reflects humanity in all its beautiful diversity, we won’t just be building smarter AI; we’ll be building a better, more equitable world for everyone.

What do you think about the gen AI gender gap? Have you experienced its impact firsthand? Share your thoughts in the comments below—I’d love to hear your story!

People Also Ask

What percentage of AI is female?

AI itself does not have a gender, but when referring to gender representation in the AI workforce, women are significantly underrepresented. As of 2025, studies show that only around 22% to 26% of AI and data science roles are held by women globally. This gap is even wider in technical and leadership positions.

What are the gender issues with AI?

Gender issues in AI include bias in training data, underrepresentation of women in AI development, and the frequent design of AI systems that reflect societal stereotypes. For example, virtual assistants are often assigned female voices and roles, reinforcing traditional gender norms. Additionally, algorithms trained on biased data can result in discriminatory outcomes in areas like hiring, healthcare, and facial recognition.

Which gender uses AI the most?

Current evidence points to men. The Harvard Business School analysis of 18 studies covering more than 140,000 users found that women are about 20% less likely than men to use generative AI tools, and the gap held across regions, jobs, and education levels. Whether that gap narrows as AI becomes more consumer-friendly and widely integrated across industries remains to be seen.

What does gen AI struggle with?

Generative AI (Gen AI) struggles with several challenges, including bias, hallucinations (producing false or misleading information), lack of true understanding, and context limitations. It also inherits and amplifies biases present in its training data, which includes gender, racial, and cultural stereotypes. Additionally, Gen AI often lacks ethical reasoning, making it risky in high-stakes decisions without proper oversight.
