I still remember the first time I heard the term “deepfake.” It was back in 2017, and I kept seeing these wild videos online. The technology seemed like something straight out of a sci-fi movie: a bit of a novelty, maybe even a little creepy, but mostly just a fascinating new trick.

Little did I know, I was witnessing the birth of a major new field in synthetic media, one that would completely change how I view the digital world.

For a long time, I thought of deepfakes as a high-tech magic show. The name itself is a portmanteau of “deep learning” and “fake,” which perfectly captures what the technology does.

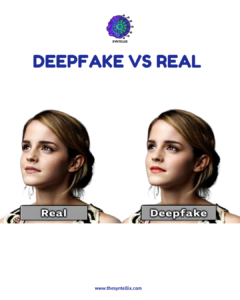

Deepfake technology uses generative AI to create realistic video, audio, or images, typically by swapping faces or mimicking voices. When you see a video of a celebrity saying something they never actually said, or a historical figure seemingly brought back to life, that’s a deepfake at work.

I’ve since learned that this technology is built on a foundation laid by decades of advancements, from video editing tools in the ’90s to the breakthroughs in neural networks that made face recognition possible. But it was only with deep generative models, such as autoencoders and generative adversarial networks (GANs), that deepfakes became so alarmingly convincing.

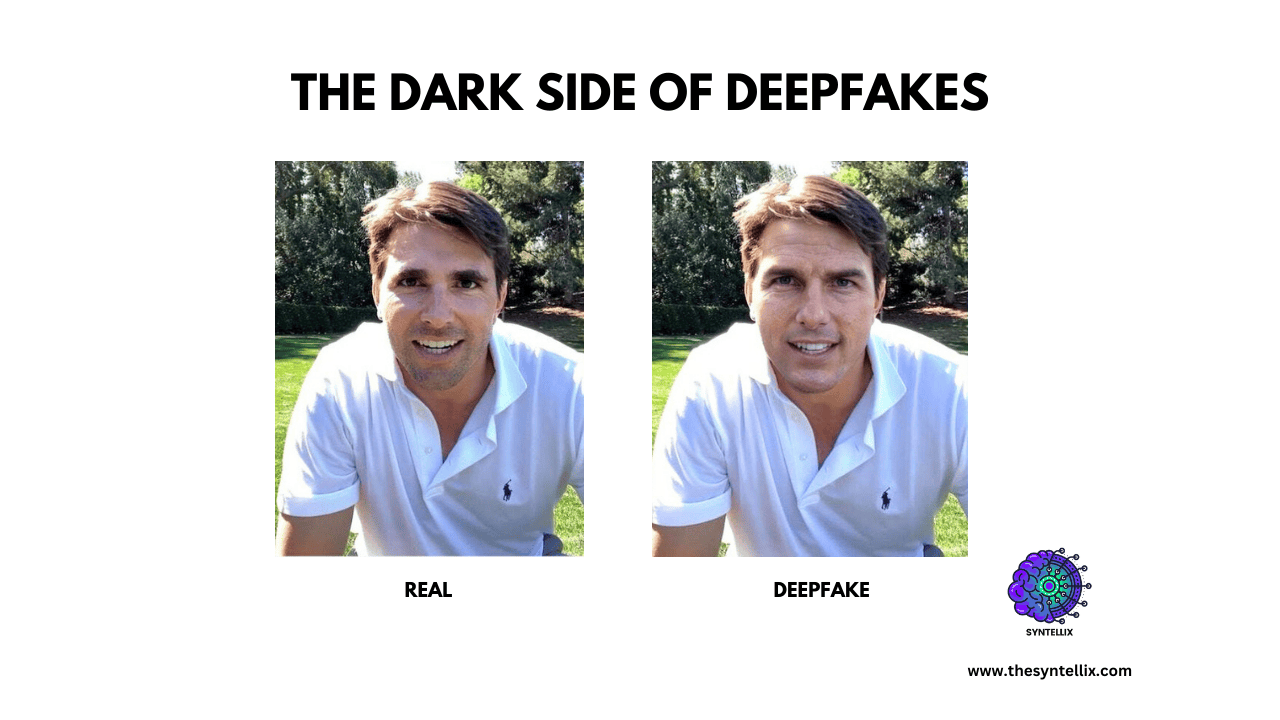

The Dark Side of Deepfakes

As I’ve followed the rapid development of this technology, I’ve had a sobering realization: the truly powerful side of deepfakes isn’t the fun stuff. The more I looked into it, the more I saw a dark, serious side to this seemingly benign tool. It’s a reality that’s increasingly challenging our trust in media, our institutions, and even each other.

For me, the most significant threat is the erosion of trust. In my work, I’ve seen how a single, convincing deepfake can wreak havoc. I remember following a story about a sophisticated voice clone that was used to scam a CEO out of hundreds of thousands of dollars. The voice was so convincing, with just the right accent and cadence, that it was nearly impossible to distinguish from the real person.

In another instance, I saw a fake video of a political figure that went viral just before an election. Even though it was debunked by fact-checkers within hours, the damage was already done. That’s the power of this technology: it moves faster than we can correct it.

This brings me to a concept I’ve been thinking about a lot lately: “reality apathy.” It describes a feeling creeping into our society, where the constant barrage of misinformation, doctored videos, and fake news makes people simply give up on trying to figure out what’s real.

When everything is a potential lie, what’s the point of believing anything? I’ve seen how this can fuel skepticism and even lead to a loss of faith in fundamental institutions like journalism and law enforcement.

And the misuse doesn’t stop there. I’ve been particularly troubled by how this technology has been weaponized against women. Early tools like DeepNude made it tragically easy for malicious actors to create non-consensual, fake explicit images. This isn’t just a technical issue; it’s a profound violation of privacy and dignity, causing real emotional and social harm.

Holding Technology Accountable

Given all of this, I started paying attention to the legal efforts to combat deepfakes. I’ve been following the proposed DEEPFAKES Accountability Act in the U.S. Congress, which I see as a crucial first step.

The idea behind it is simple: require creators to clearly label or watermark any AI-generated media. Whether it’s a video, an image, or an audio clip, the consumer should know it’s synthetic.

I believe that holding people accountable for removing those labels or intentionally misleading others is essential for preserving the integrity of our digital world. While the federal bill is still pending, I’ve also noticed that many states are taking action and creating their own laws to address these issues.

The Bright Side of Deepfakes

It’s easy to focus on the negative, but I’m a firm believer that technology isn’t inherently good or bad; it’s what we do with it that matters. I’ve also had the chance to see some truly inspiring and creative uses of this technology.

I was fascinated by the ad campaign that used a deepfake of David Beckham to have him speak about malaria in nine different languages, or how filmmakers are using it to de-age actors in movies. It’s a game-changer for accessibility, too, with voice cloning helping people with speech impairments communicate more naturally.

Ultimately, my experience has taught me that deepfakes aren’t just a gimmick; they’re a powerful tool with the potential to build or destroy. It’s on us to understand the technology and advocate for its responsible use. The more we learn, the better equipped we’ll be to navigate this new, synthetic reality.

What are deepfakes?

Deepfakes are fake videos, images, or audio created using artificial intelligence (AI) to make it look or sound like someone said or did something they never actually said or did.

Why are deepfakes considered dangerous?

Deepfakes can spread misinformation, ruin reputations, influence elections, cause public panic, and even create fake evidence in legal cases. They blur the line between real and fake, making it hard to know what to believe.

How can deepfakes affect politics?

Deepfakes can be used to create fake speeches or actions by politicians, which can mislead voters, damage trust, or even start international conflicts if believed to be true.

Can deepfakes be used to harm individuals?

Yes. Deepfakes are often used to create non-consensual explicit videos, cyberbully others, or blackmail people by placing their faces in fake but believable content.

How do deepfakes hurt journalism?

They can fool journalists into reporting false information or make real news look fake, which can undermine trust in the media and confuse the public.

Are deepfakes illegal?

Creating deepfakes is not inherently illegal, but using them to cause harm, such as fraud, harassment, or election interference, can be. Laws are still developing in many countries to deal with deepfakes.

Can deepfakes threaten national security?

Yes. Deepfakes can be used for disinformation campaigns, cyber warfare, or to manipulate public opinion, all of which can threaten national security.

How can I spot a deepfake?

Look for things like blurry edges, strange blinking, unnatural movements, or inconsistent lighting. Keep in mind, though, that deepfakes are getting more realistic, so these signs aren’t always present and a fake isn’t always easy to spot.

What should I do if I see a harmful deepfake?

Report it to the platform where you found it (like YouTube or Facebook), and if it’s serious (like harassment or impersonation), report it to authorities as well.
