I’ve been tracking the world of AI for a while now, and I used to think of it in a pretty straightforward way: as a powerful tool for automation and data analysis. But over the past year, I’ve been blown away by the incredible leap forward in what I call the “most interesting models.”

It’s one thing to read the widely cited forecast that AI could add over $15 trillion to the global economy by 2030. It’s another thing entirely to see these models in action: writing music, painting breathtaking art, and even helping diagnose diseases. These are tasks we once thought were reserved for the human mind, and yet I’m seeing AI tackle them with surprising brilliance.

This isn’t about just saying “AI is cool.” It’s about a model’s ability to blend powerful technology with tangible, real-world impact. So, what makes a model truly interesting? For me, it comes down to a few key factors:

- Creativity: Can it produce something genuinely novel, like a new song or a piece of art that feels original?
- Accuracy: Does it solve complex problems with minimal errors?
- Scalability: Can it be applied to different industries and a wide range of tasks?
- Impact: Does it genuinely help people, solve problems, or make life better?

With those questions in mind, I want to share my top five most interesting AI models that are making waves right now.

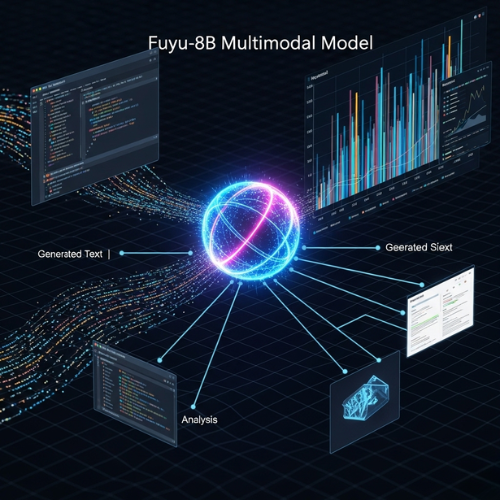

1. Fuyu-8B – Adept’s Native Multimodal Model

When I first came across Fuyu-8B, I was skeptical. So many models claim to be multimodal, but they often feel like text models with an image feature tacked on. Fuyu-8B, however, was a different story. It was designed from the ground up to handle both text and images simultaneously, and you can feel that difference in how it works.

Developed by Adept, this 8-billion-parameter model is fast and efficient while still being capable of advanced reasoning across modalities. It can interpret complex image inputs like charts, screenshots, or even memes and respond with contextually aware answers.

I was particularly impressed with its ability to handle document analysis and UI interpretation—real-world tasks that are a massive headache with other models. Its native multimodal design truly makes it one of the most interesting models in current development.

Key Features that Impressed Me:

True Multimodal Capabilities: It processes both images and text seamlessly.

No Vision Tokenizer: It processes image patches directly, which simplifies the architecture and reduces latency.

Efficient and Accessible: With only 8 billion parameters, it balances performance and efficiency perfectly.

Real-World Training: It was trained on real-world data, which gives it a practical understanding of everyday contexts.
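To make the "no vision tokenizer" point concrete, here is a minimal sketch of the general idea as I understand it: cut an image into fixed-size patches, then push each flattened patch through a single linear projection so it can sit in the same sequence as text embeddings. This is not the model's actual code. The function names, the tiny 4x4 grayscale image, the patch size, and the weights are all made up for illustration.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def split_into_patches(image: Image, patch: int) -> List[List[float]]:
    """Cut the image into patch x patch tiles (raster order) and flatten each tile."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be multiples of the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tile = [image[top + r][left + c] for r in range(patch) for c in range(patch)]
            patches.append(tile)
    return patches


def project(patch_pixels: List[float], weights: List[List[float]]) -> List[float]:
    """One linear layer: each output value is a weighted sum of the patch pixels."""
    return [sum(w * p for w, p in zip(row, patch_pixels)) for row in weights]


# Toy 4x4 image and a hypothetical 2-dimensional embedding (made-up weights).
image = [
    [0.0, 0.1, 0.2, 0.3],
    [0.4, 0.5, 0.6, 0.7],
    [0.8, 0.9, 1.0, 1.1],
    [1.2, 1.3, 1.4, 1.5],
]
weights = [
    [1.0, 0.0, 0.0, 0.0],      # picks the top-left pixel of each patch
    [0.25, 0.25, 0.25, 0.25],  # averages the patch
]

patches = split_into_patches(image, patch=2)
image_embeddings = [project(p, weights) for p in patches]
```

Each entry in `image_embeddings` plays the role a word embedding plays for text, which is why there is no separate image encoder in this picture.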

To learn more about Perplexity AI, read my review: Perplexity AI – Brief Overview & Q/A

2. ERNIE Bot – Baidu’s Answer to Generative AI

I have to admit, I was curious about Baidu’s ERNIE Bot. I’ve used global models like GPT-4 and Claude extensively, but I knew I was missing something by not exploring the Chinese-centric models. ERNIE Bot, built on the ERNIE architecture, changed my perspective.

Unlike models that just learn from plain text, ERNIE integrates structured knowledge into its deep learning process. It incorporates semantic facts and relationships, which I believe makes its responses more informed and accurate.

What truly sets it apart, though, is its deep understanding of the Chinese language and cultural context. It’s not just a regional player; it’s a leader that’s showing how powerful generative AI can be when it’s localized without sacrificing power or scalability.

Key Features that Impressed Me:

Knowledge-Enhanced Pretraining: It integrates structured knowledge from encyclopedias, giving it a much deeper understanding of relationships between entities.

Continual Learning: It can update its knowledge as new information becomes available, which is a huge advantage over static models.

Strong Performance: Its knowledge-driven design helps it perform incredibly well in real-world applications.

3. WizardLM 2 – A Smarter Instruction Follower

For a while, I’ve been looking for an open-source model that could truly follow complex instructions. That’s when I found WizardLM 2. This model doesn’t just predict the next word; it understands and responds to multi-turn instructions with incredible logical depth.

I found it particularly useful for scenarios requiring step-by-step reasoning or detailed explanations, making it a valuable tool for researchers and educators. What’s truly great about it is that its weights are open, so anyone can fine-tune or adapt it. It’s an excellent example of how open-weight models can still push boundaries and compete with larger, proprietary systems.

Key Features that Impressed Me:

Instruction-Following Expertise: It excels at tasks that require detailed breakdowns and logical thinking.

Evol-Instruct Tuning: It was trained on instructions that increase in complexity, making it more capable of understanding nuanced commands.

Open Source: Being open-source makes it incredibly accessible and adaptable for a wide range of uses.
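The idea of "instructions that increase in complexity" can be sketched as a loop that repeatedly rewrites a seed instruction into a harder one. In the real method, as I understand it, a language model does the rewriting. Here a hypothetical `toy_rewriter` just appends a requirement so the example runs on its own. The prompt wording and function names are my assumptions, not the WizardLM team's code.

```python
from typing import Callable, List

# Hypothetical rewrite prompts; the wording actually used in training is not shown in this article.
EVOLUTION_PROMPTS = [
    "Add one more constraint to this instruction: {instruction}",
    "Rewrite this instruction so it needs step-by-step reasoning: {instruction}",
]


def evolve(seed: str, rewrite: Callable[[str], str], rounds: int) -> List[str]:
    """Return the seed plus one harder variant per round."""
    chain = [seed]
    for i in range(rounds):
        template = EVOLUTION_PROMPTS[i % len(EVOLUTION_PROMPTS)]
        prompt = template.format(instruction=chain[-1])
        chain.append(rewrite(prompt))
    return chain


def toy_rewriter(prompt: str) -> str:
    """Stand-in for a language model call: keeps the instruction and tacks on a requirement."""
    instruction = prompt.split(": ", 1)[1]
    return instruction + " Explain each step."


chain = evolve("Sort a list of numbers.", toy_rewriter, rounds=2)
```

Swapping `toy_rewriter` for a real model call would turn `chain` into a ladder of progressively harder training instructions built from one simple seed.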

4. GNoME – Google DeepMind

This model truly blew me away. GNoME (Graph Networks for Materials Exploration) isn’t just about processing data; it’s about actively discovering new materials. It uses deep learning to predict the stability of crystal structures that have never been made before.

Think about that for a second. It can essentially “imagine” new chemical compounds and determine if they’re viable in the real world. In recent studies, it predicted about 2.2 million potential new crystal structures, a major leap for materials science, where traditional trial-and-error can take years. I see GNoME as a brilliant example of how AI is no longer just a tool for creating text or images; it’s actively expanding the frontiers of human knowledge and accelerating scientific progress.

Key Features that Impressed Me:

Scientific Discovery: It helps discover new, stable crystal structures.

Massive Materials Discovery: It has predicted over 2.2 million new crystal structures.

Energy-Efficient: By predicting which materials are worth simulating, GNoME saves years of experimental work and reduces computational costs.
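The "predict first, simulate later" idea in that last point can be sketched as a simple filter: a cheap model scores every candidate, and only the promising ones move on to expensive simulation. The candidate names, energy values, and threshold below are invented for illustration, and the lookup table is a stand-in for a model's predictions, not GNoME's graph network.

```python
from typing import Dict, List

# Made-up predicted energies above the convex hull (eV/atom); lower means more likely stable.
predicted_energy: Dict[str, float] = {
    "candidate_A": 0.00,
    "candidate_B": 0.12,
    "candidate_C": 0.03,
    "candidate_D": 0.40,
}


def shortlist(predictions: Dict[str, float], threshold: float) -> List[str]:
    """Keep candidates whose predicted energy is at or below the threshold, best first."""
    keep = [name for name, energy in predictions.items() if energy <= threshold]
    return sorted(keep, key=lambda name: predictions[name])


# Only the shortlisted candidates would be passed to a costly simulation step.
to_simulate = shortlist(predicted_energy, threshold=0.05)
```

With these toy numbers, two of the four candidates survive the filter, so half of the simulation budget is saved before any heavy computation starts.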

5. Grok 3 – xAI

I’ve been following Grok’s development closely. Grok 3 stands out because it combines smart learning with a deep understanding of human language.

It learns from real-world data in a way that feels natural and flexible, which means it can understand not just words but also the meaning behind them. This is what makes a conversation with it feel smoother and more helpful.

What I find most interesting is its quick adaptability and ability to handle multiple tasks, whether it’s answering customer questions, analyzing data, or giving personalized advice. The fact that it can explain its decisions and improve over time by learning from new information makes it an incredibly promising and interesting model.

Key Features that Impressed Me:

Advanced Language Understanding: It understands the subtle meaning behind human language.

Context Awareness: It considers the situation and tone to provide personalized responses.

Multitasking Capability: It can handle various inputs, including text, voice, and data.

Continuous Learning: It gets smarter over time by learning from new information.

What’s Next?

As I reflect on these models, it’s clear that we’re standing at the edge of a generative AI renaissance. Each one brings a different strength to the table—speed, depth, or the ability to rewrite the future of science. Staying informed about these models is essential for anyone who wants to be part of what’s next.

So, which of these models interests you the most? I’d love to hear your thoughts in the comments!

People also ask

What is the most powerful AI model in 2025?

As of 2025, Grok 3 by xAI and GPT-4.5 (or its successor from OpenAI) are widely recognized as among the most powerful AI models. However, models like Google DeepMind’s GNoME for scientific discovery and Anthropic’s Claude 3.5 are also leading the field in specialized tasks. The most powerful model depends on the use case, whether that is general language understanding, coding, scientific research, or multimodal reasoning.

What are the trends for AI in 2025?

AI trends in 2025 are focused on intelligent automation, multimodal AI, AI governance and regulation, and personalized AI assistants. We’re also seeing growth in AI TRiSM (AI trust, risk, and security management), ethical AI design, AI in drug discovery, and low-code/no-code AI platforms. Another notable trend is the shift from model development to real-world integration in industries like healthcare, finance, education, and logistics.

Which is the best AI tool in 2025?

The “best” AI tool depends on your needs. For general productivity and content generation, ChatGPT (Pro version with GPT-4.5 or newer) is leading in accessibility and capabilities. For research, Claude 3.5 by Anthropic is praised for its nuanced reasoning. For developers, tools like GitHub Copilot X and Replit AI are enhancing coding efficiency. In creative fields, Runway AI and Sora by OpenAI are revolutionizing video and media generation.

What is predicted for AI in 2025?

Experts in 2025 predict that AI will become more embedded in everyday life, not just as a tool but as a collaborative assistant across industries. It’s expected to drive more autonomous decision-making, optimize supply chains, revolutionize customer service, and even reshape education. AI safety, regulation, and ethical development are also central themes, with global frameworks being developed to ensure responsible use.
