I’ve spent a lot of time exploring the world of AI, and I’ve found that it’s a topic that’s often misunderstood. When I first started, my head was spinning with terms like “machine learning” and “neural networks.” But nothing was more confusing to me than the concept of Artificial General Intelligence (AGI).

I used to think of it as pure science fiction, but the more I’ve learned, the more I realize it’s a serious goal that researchers and data scientists are working toward. I want to share my own journey of understanding AGI, from my initial confusion to a clearer picture of what it is and why it matters.

My First Question: Is Today’s AI an AGI?

When I first saw tools like ChatGPT, which could write poetry or even hold a conversation, my first instinct was, “Wow, we’ve done it! This must be AGI!” But as I learned more, I realized I was missing a crucial piece of the puzzle. What we have today, like the AI that recommends movies on Netflix or the software that steers a self-driving car, is called Narrow AI.

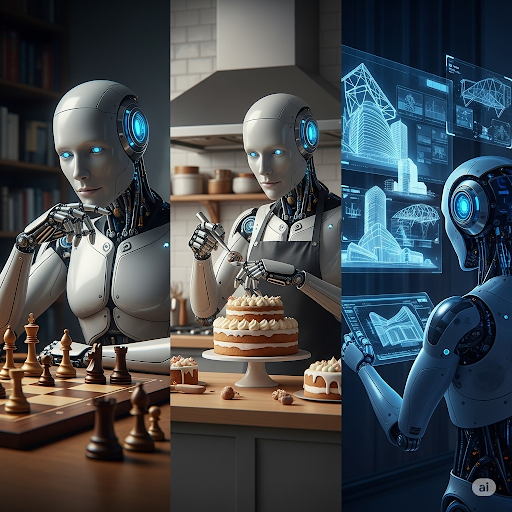

I like to think of Narrow AI as a brilliant specialist. A chess AI can beat any human in the world, but if you ask it to write a poem, it’s completely useless because that’s not its purpose. It’s a master of one craft, and that’s it. My music app knows exactly what songs I’ll like, but it can’t make me a cup of coffee. It has a very specific job and it does it incredibly well.

AGI, on the other hand, is the ultimate generalist. It’s the kind of AI that could learn to play chess, then turn around and learn to bake a cake, and then design a building—all without being specifically programmed for each of those tasks. This is the key difference I had to grasp: it’s not about doing one thing well; it’s about being able to learn and adapt to anything just like a person can.

The Capabilities of AGI (How Close We Are to AGI)

So, if we ever get to a point where we have a true AGI, what would it actually be able to do? Based on my research, here’s what I’ve come to understand.

- Learn Anything: An AGI wouldn’t be limited to what its creators taught it. It would have the ability to learn any skill or piece of information a human can, and probably much, much faster. Imagine a single AI that could be your personal math tutor, a chef, and a pilot all in one. That’s the kind of learning ability we’re talking about.

- Understand New Situations: Unlike today’s AI, which works reliably only in the kinds of situations it was trained or programmed for, an AGI would be able to adapt to a brand-new situation. Think about an AI that has never encountered a beach. An AGI could work out what it is, what activities are possible there, and how to behave safely, all without a single line of new code.

- Reason and Make Decisions: An AGI would be able to reason, solve problems, and make complex decisions in ways that current AI simply can’t. Today’s AI systems follow fixed rules or patterns learned from their training data, but an AGI could reason with nuance, weighing ethical implications or creative solutions that aren’t obvious.

- Work Like a Human (or Better): An AGI could perform a huge range of tasks. It could paint, write, teach, and create with human-like skill, but with the added benefits of not getting tired or making human errors. It could work tirelessly for weeks or months on a single problem, pushing past the limits of human concentration.

- Keep Learning on Its Own: An AGI would be autonomous. It would learn from its own experiences and interactions, constantly improving and evolving without human intervention. This is what makes the concept both so exciting and a little bit daunting.

AGI vs. Narrow AI

To really drive home the difference, I found that putting the two side by side in a simple table was the best way to keep them straight in my mind:

| Aspect | Narrow AI | AGI (Artificial General Intelligence) |
|---|---|---|
| Scope | Task-specific (e.g., playing chess, facial recognition) | General-purpose (can perform any intellectual task a human can) |
| Learning Ability | Learns only within a specific domain | Learns and adapts across multiple domains |
| Flexibility | Cannot generalize knowledge | Can apply knowledge to new, unrelated tasks |
| Examples | Siri, ChatGPT, self-driving cars | Hypothetical systems (e.g., human-like robots) |
| Current Status | Widely used in real-world applications | Still theoretical, not yet achieved |
| Goal | Optimized for specific tasks | Mimics human-like reasoning and problem-solving |

The Technologies That Might Get Us There

So, how would we even build something like this?

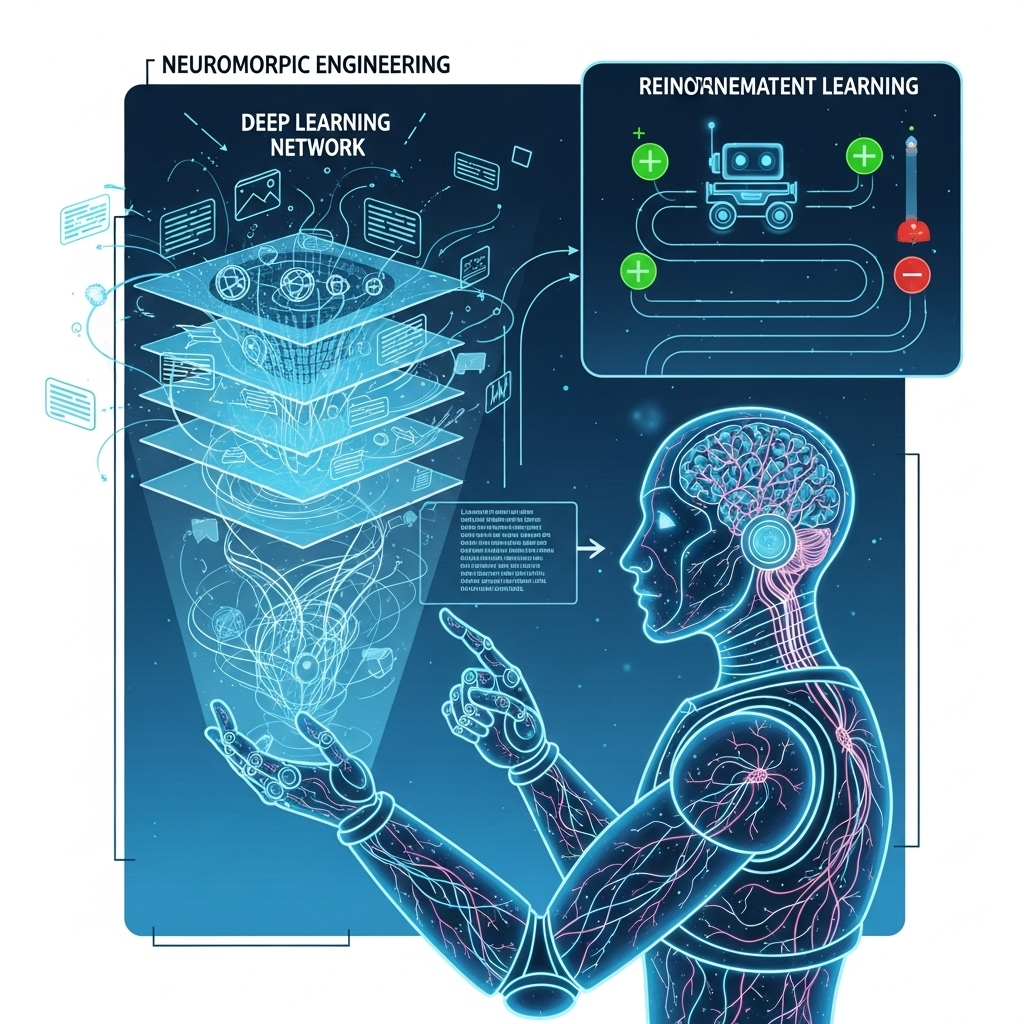

- Deep Learning: This is the technology behind advanced image recognition and language models. The idea is to use increasingly large neural networks, loosely inspired by the brain, to let machines learn from massive amounts of data.

- Reinforcement Learning: This is a cool concept. It’s a method of training an AI by rewarding it for good decisions and penalizing it for bad ones. I think of it as training a dog to do a trick—when it does it right, you give it a treat. This approach could teach an AGI to make complex, multi-step decisions in the real world.

- Neuromorphic Engineering: This sounds complicated, but it’s really about building computer hardware that’s designed to mimic the architecture of the human brain. The idea is that if the hardware looks more like a brain, the software that runs on it might be more human-like as well.
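To make the dog-and-treat idea behind reinforcement learning concrete, here is a tiny sketch I put together. It is only a toy under my own assumptions: the function name `train_trick`, the list of tricks, and the reward values of +1 and -1 are all made up for illustration, and real systems are far more elaborate. The "dog" mostly repeats whichever trick has earned the best score so far, and occasionally tries a random one.

```python
import random

def train_trick(actions, correct_action, episodes=200,
                epsilon=0.1, learning_rate=0.1, seed=0):
    """Toy reward-based learning (hypothetical example, not a real library API)."""
    rng = random.Random(seed)                       # fixed seed for repeatable runs
    values = {action: 0.0 for action in actions}    # how good each trick seems so far

    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(actions)            # explore: try a random trick
        else:
            action = max(actions, key=values.get)   # exploit: best trick so far

        # Treat for the right trick, penalty for anything else (assumed values).
        reward = 1.0 if action == correct_action else -1.0

        # Nudge the estimate for this trick toward the reward it just received.
        values[action] += learning_rate * (reward - values[action])

    return values

values = train_trick(["roll over", "bark", "sit"], correct_action="sit")
best_action = max(values, key=values.get)
print(best_action, values)
```

After enough rounds, the rewarded trick ends up with the highest score, which is the whole idea in miniature: nobody tells the learner the answer, it just follows the treats.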

The Challenges That Keep Me Up at Night (in a Good Way)

Despite all the incredible research, I’ve come to realize that AGI is still a long way off. There are huge hurdles that we, as a species, need to overcome first.

- Understanding Our Own Brains: We still don’t fully understand how our own brains work. We have only a partial picture of how we’re able to reason so effectively, be creative, or make common-sense decisions. It’s tough to build something you don’t fully understand.

- Safety and Ethics: This is the most important part for me. How do we ensure that an AGI, with a level of intelligence far beyond our own, is safe and aligned with human values? It’s a problem we have to solve before we get there.

- Computational Limits: An AGI would likely require an immense amount of computing power, possibly more than we currently have. It’s a logistical challenge that may require major breakthroughs in hardware technology.

- Generalization: This is still the biggest hurdle. Teaching a machine to generalize knowledge from one task to a completely new one is incredibly difficult, and we haven’t found a reliable way to do it yet.
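The generalization problem clicked for me when I tried a small experiment. This is only a sketch under my own assumptions: the curve `target`, the input ranges, and the helper names are invented for illustration, and a straight-line fit is a stand-in for a far more complex model. The line is fitted on inputs between 0 and 1, then tested on inputs between 2 and 3 that it has never seen.

```python
def target(x):
    """The 'real world' the model is trying to learn (assumed: a simple curve)."""
    return x * x

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

def mean_abs_error(slope, intercept, xs):
    """Average gap between the line's predictions and the true curve."""
    return sum(abs(slope * x + intercept - target(x)) for x in xs) / len(xs)

train_xs = [i / 10 for i in range(11)]       # familiar inputs: 0.0 to 1.0
new_xs = [2 + i / 10 for i in range(11)]     # unfamiliar inputs: 2.0 to 3.0

slope, intercept = fit_line(train_xs, [target(x) for x in train_xs])
train_error = mean_abs_error(slope, intercept, train_xs)
new_error = mean_abs_error(slope, intercept, new_xs)

print(f"error on familiar inputs:   {train_error:.3f}")
print(f"error on unfamiliar inputs: {new_error:.3f}")
```

The fit looks fine on the range it was trained on and falls apart outside it. It's a crude analogy, but it's the same flavor of failure: doing well on what you've seen says little about what you haven't.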

The Future I Envision

So, when will AGI become a reality? I’ve seen so many different answers from experts. Some believe it could be as soon as 2040, while others think it’s still centuries away. The truth is, no one knows for sure.

What I do know is that the journey to AGI will be filled with amazing breakthroughs and huge questions. I’ve become fascinated by the concept of the singularity—the hypothetical moment when AGI surpasses human intelligence. While it’s a big, almost overwhelming idea, I believe it’s up to all of us to ensure that this future is one we’re all prepared for.

To learn more about the AI singularity, read my blog post: How Close Are We To The Technological Singularity?

In my view, AGI is a vision of what machines could become. From solving global challenges to revolutionizing industries, it has the potential to transform our world for the better. But with that potential comes great responsibility. My hope is that as we get closer, we’ll ensure it’s developed ethically and used for the benefit of all.

So, what are your thoughts? Are you excited, cautious, or a mix of both? I’d love to hear your perspective!
