Introduction

Artificial intelligence (AI) has become an important part of everyday life, from job applications to medical diagnoses. That raises an important question: is AI treating everyone equally, or is it favoring one group over another based on race, gender, or background?

AI systems learn from data, and if that data reflects human biases, they can make unfair decisions.

Fairness in AI simply means that an AI system works equally well for everyone, without discrimination. In this post, we’ll break down what fairness in AI really means, why it matters, and how experts are working to build more just and reliable technology.

What Is Fairness in AI?

Fairness in AI means that AI systems should make decisions without bias, giving no group an unfair advantage over another.

In simple terms, AI systems should apply the same relevant criteria to everyone, so that factors like a person’s race or gender don’t lead to unfair treatment.

Fairness in AI matters because AI systems now help decide who gets jobs, loans, and even medical care. If an AI system is biased, it could unfairly block some people from these opportunities.

For example, a hiring algorithm trained mostly on data from one group might favor similar candidates and overlook equally qualified others. Likewise, experts note that a biased healthcare model can “lead to inequities” in patient care and that recruitment algorithms might favor one gender or race over another.

Researchers warn that built-in biases can skew outcomes and weaken public trust, so fairness efforts aim to remove these biases and treat people as fairly as possible.

Similarly, researchers have found AI-based loan tools that denied credit to Black applicants more often than to equally qualified white applicants.

Stories like these shake public trust: in a Pew survey, 58% of Americans said they believe computer programs will always reflect some human bias. To address this, governments are stepping in.

The EU’s AI Act (2024) requires high-risk AI systems (like hiring or lending systems) to guard against discrimination, and its most serious violations can draw fines of up to €35 million or 7% of a company’s worldwide annual turnover. U.S. regulators have made the same point: there is “no AI exemption” to existing civil-rights or fair-lending laws. In short, fairness in AI means everyone is treated equally, and companies that ignore this rule can face heavy penalties.

Exploring Visualization for Fairness in AI Education

A group of researchers, led by Bei Wang, created a smart way to teach fairness in AI using visual tools. Let’s break down what they did and what we can learn from it.

Who Did This Research Help?

Most AI fairness tools are made for people who can code. But not everyone using AI is a programmer. This research was focused on nontechnical students, especially business majors, who will likely use AI tools in their careers. The researchers wanted to help these students learn to understand and question unfair AI behavior.

How Did They Teach Fairness? With Easy-to-Use Visuals

The team created six visual tools. Each one shows a different part of AI fairness using pictures and simple interactions, not code.

Here’s what each part does:

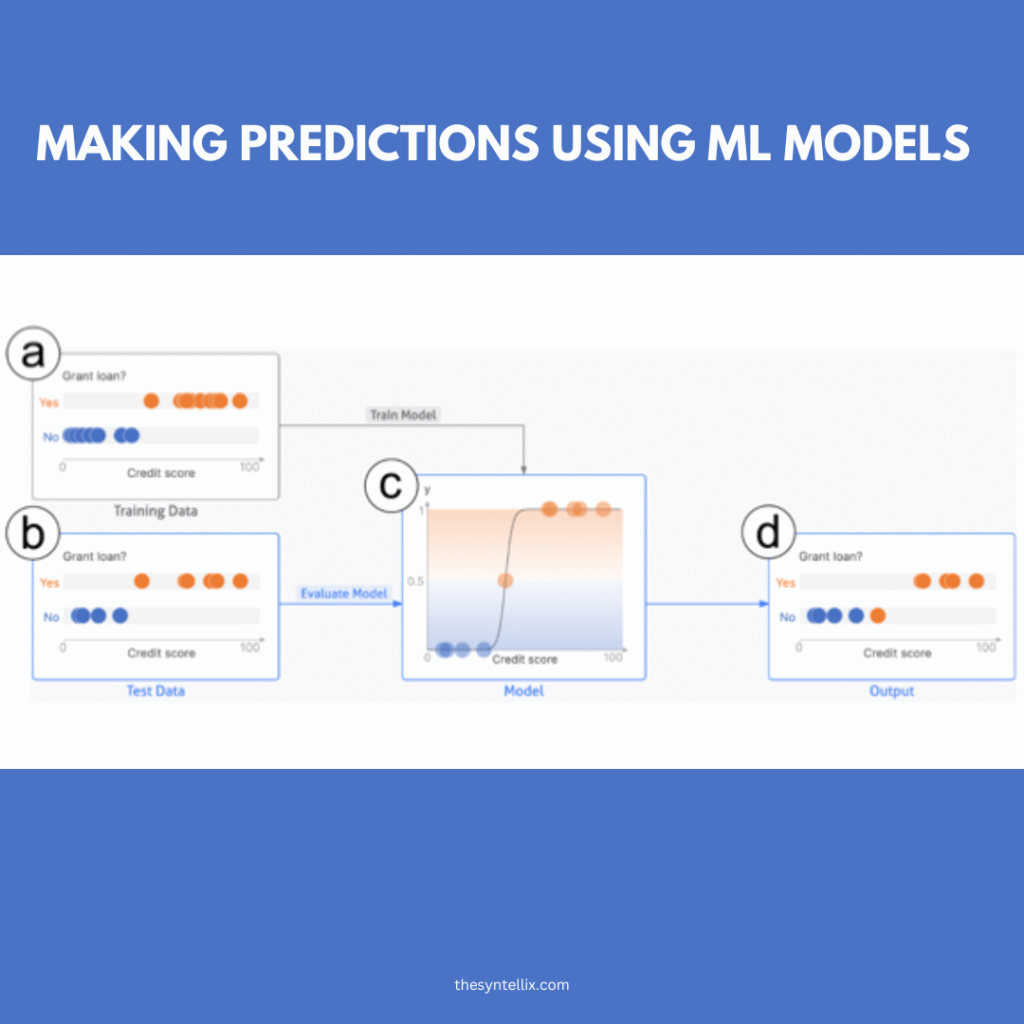

Machine Learning Steps (C1):

Shows how AI learns from data step by step.

Change the Data (C2):

Lets users try different training data to see how it changes the results.

Test by Group (C3):

Shows how the AI performs for different groups (like men vs. women).

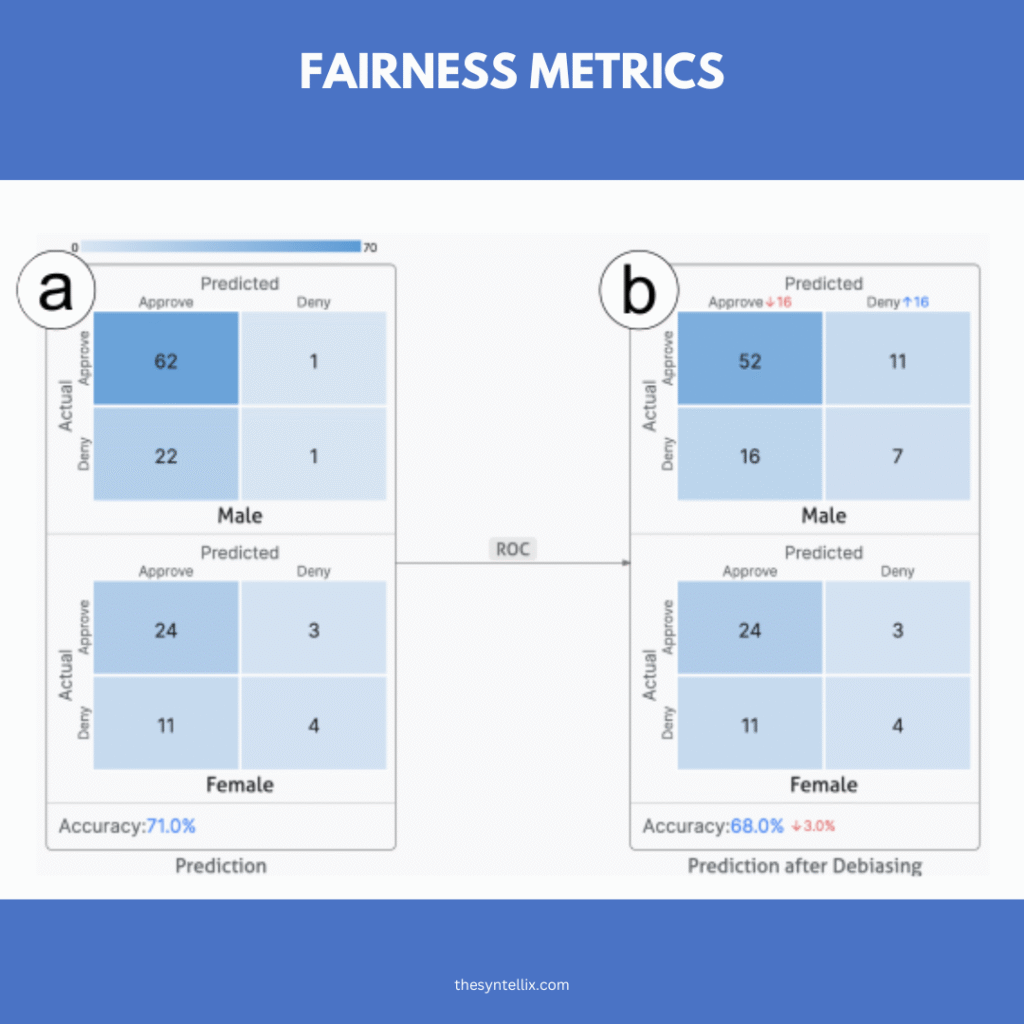

Compare Fairness Rules (C4):

Lets users compare different fairness rules, like equal treatment or equal chances.

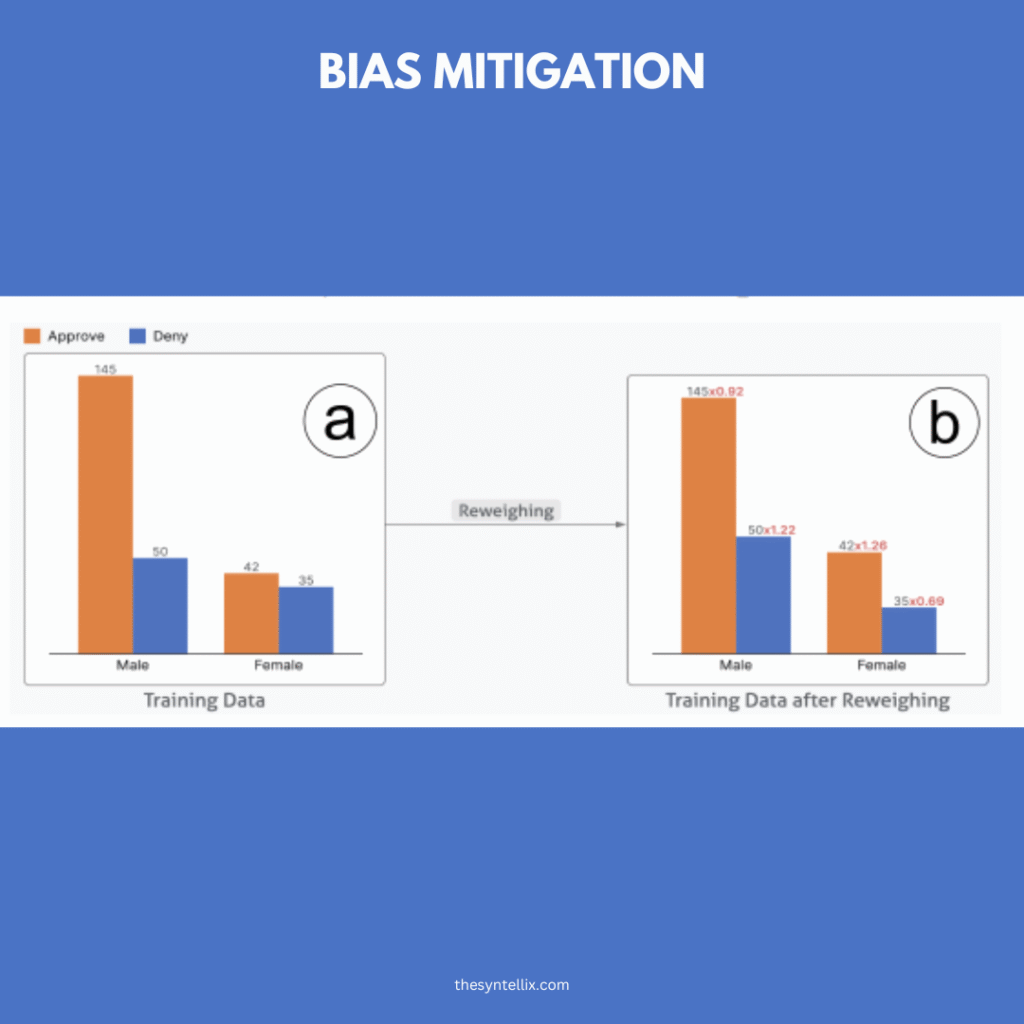

Fix Bias Before Training (C5):

Shows how cleaning data before using it helps improve fairness.

Fix Bias After Training (C6):

Shows how to adjust AI results after the model is trained.
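The post does not say exactly how the Test by Group and Compare Fairness Rules views compute their numbers. As a rough illustration only, the sketch below computes two widely used group metrics in plain Python: the selection rate per group (one common reading of "equal treatment", known as demographic parity) and the true positive rate per group (one common reading of "equal chances", known as equal opportunity). The function names and toy data are hypothetical.

```python
from collections import defaultdict

def group_rates(y_true, y_pred, groups):
    """Return per-group selection rate and true positive rate (TPR).

    selection rate: share of the group the model predicts as positive
    TPR: share of the group's truly positive members predicted positive
    """
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "pos": 0, "tp": 0})
    for yt, yp, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["selected"] += yp
        c["pos"] += yt
        c["tp"] += yt * yp  # 1 only when truly positive AND predicted positive
    return {
        g: {
            "selection_rate": c["selected"] / c["n"],
            "tpr": c["tp"] / c["pos"] if c["pos"] else float("nan"),
        }
        for g, c in counts.items()
    }

def gap(rates, metric):
    """Largest minus smallest value of a metric across groups (0 means equal)."""
    values = [r[metric] for r in rates.values()]
    return max(values) - min(values)

# Hypothetical toy data: 1 = qualified / selected, 0 = not.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
print(rates)  # {'A': {'selection_rate': 0.75, 'tpr': 1.0}, 'B': {'selection_rate': 0.25, 'tpr': 0.5}}
print(gap(rates, "selection_rate"), gap(rates, "tpr"))  # 0.5 0.5
```

In this toy data, group A is selected three times as often as group B, and qualified members of group B are picked only half the time, so both gaps are 0.5. Which gap matters more depends on which fairness rule you adopt.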

Why Did These Visuals Work So Well?

The visuals made learning easier by:

- Keeping the design simple.

- Letting users explore by clicking and testing ideas.

- Explaining ideas with short, clear text.

- Avoiding confusing terms.

These tools helped students see fairness problems instead of just reading about them.

What Did the Students Say?

Over 400 students tried these tools. Some read text only, some saw static pictures, and others used the interactive tools. The results were clear:

- Visuals worked better than text alone.

- Even simple images helped students remember fairness ideas.

- Most students didn’t explore every interactive part, but the visuals still helped them understand more.

What Can Be Better?

Some students felt the tools had too much information. Others didn’t know how to start using the interactive parts. The researchers saw a need to:

- Break the content into smaller pieces.

- Guide students more clearly through the tools.

These small changes could make learning even easier.

Why This Project Matters

Fairness in AI affects all of us. We need to teach it in a way that everyone can understand. This project shows that visuals and simple design can help people learn complex ideas even if they don’t know how to code.

When more people learn how AI works and how it can go wrong, they can help build better and fairer systems in the future.

Challenges in Ensuring Fairness

Achieving fairness in AI systems is tricky because people define the word “fair” differently. For instance, one view holds that AI systems should aim for equality, treating everyone by the same rule, while another holds that they should aim for equity, which might mean giving extra help to those who start at a disadvantage. Here are some of the main challenges in achieving fairness in AI systems.

1. Bias in Training Data

AI systems learn from data, so if historical data is prejudiced, the system is trained on unfair examples, which leads to unfair decisions. Studies have documented people missing out on jobs or loans because of biased algorithms, and experts point out that even when visible bias is removed, hidden bias often remains in these complex systems.

To address these issues, specialists recommend transparency and oversight. For example, they suggest using “explainable” AI systems (which clearly show how they reach decisions) along with clear rules that ensure fairness and accountability.

Solution: AI systems should use diverse, representative datasets for learning and training. Tools like IBM’s AI Fairness 360 help identify and remove bias in training data.
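AI Fairness 360 has its own dataset and metric classes; the sketch below is not its API, just a plain-Python illustration (with hypothetical names and toy data) of the kind of first check such tools automate: how well each group is represented in the training data, and how often each group carries the positive label.

```python
from collections import Counter

def data_profile(labels, groups):
    """Per group: share of training rows and rate of positive labels."""
    total = len(groups)
    rows = Counter(groups)
    positives = Counter(g for g, y in zip(groups, labels) if y == 1)
    return {
        g: {"share": n / total, "positive_rate": positives[g] / n}
        for g, n in rows.items()
    }

# Hypothetical hiring history: group B is under-represented and rarely labelled "hired".
groups = ["A"] * 16 + ["B"] * 4
labels = [1] * 12 + [0] * 4 + [1] + [0] * 3

print(data_profile(labels, groups))
# {'A': {'share': 0.8, 'positive_rate': 0.75}, 'B': {'share': 0.2, 'positive_rate': 0.25}}
```

Large gaps in either column do not prove the data is unfair, but they are a signal to investigate before training, for example by collecting more data for the under-represented group.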

2. Fairness vs. Accuracy Trade-Off

A problem arises when an AI system tries to be both fair (treating different groups equally) and highly accurate (making correct predictions). Both goals matter, but they can clash: ensuring fairness can sometimes reduce a model’s accuracy.

For example, an AI hiring system trained on past data might be very good at picking candidates who do well on the job. If we then change the tool to give equal hiring chances to everyone (so it’s fairer), it may stop picking only the very top candidates from one group. This can make it slightly less accurate at predicting job success.
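To make the trade-off concrete, here is a toy sketch with made-up scores and historical labels (all hypothetical). It compares one shared cut-off against per-group cut-offs chosen so that both groups are selected at the same rate. Per-group thresholds are used here only as the simplest way to equalize selection rates, not as a recommended practice.

```python
def evaluate(scores, labels, groups, thresholds):
    """Apply a per-group threshold; return overall accuracy and per-group selection rates."""
    preds = [int(s >= thresholds[g]) for s, g in zip(scores, groups)]
    accuracy = sum(p == y for p, y in zip(preds, labels)) / len(labels)
    rates = {}
    for g in thresholds:
        member_preds = [p for p, gg in zip(preds, groups) if gg == g]
        rates[g] = sum(member_preds) / len(member_preds)
    return accuracy, rates

# Hypothetical model scores and historical "did well on the job" labels.
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.4, 0.2, 0.1]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4

same_rule = evaluate(scores, labels, groups, {"A": 0.5, "B": 0.5})
equal_rates = evaluate(scores, labels, groups, {"A": 0.75, "B": 0.3})
print(same_rule)    # (1.0, {'A': 0.75, 'B': 0.25})
print(equal_rates)  # (0.75, {'A': 0.5, 'B': 0.5})
```

The shared cut-off matches the historical labels perfectly but selects group A three times as often; equalizing selection rates drops accuracy from 1.0 to 0.75. Keep in mind that accuracy here is measured against past outcomes, which may themselves reflect bias.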

Solution: AI systems can balance fairness with performance using techniques like reweighting or adversarial debiasing.
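Reweighting can be illustrated with the classic reweighing scheme of Kamiran and Calders, which AIF360’s `Reweighing` preprocessor is based on. The sketch below is a plain-Python illustration, not that library’s API: it gives each training example a weight so that, in the weighted data, group membership and label look statistically independent. Adversarial debiasing needs a neural-network training loop and is not shown. Function names and data are hypothetical.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight each example by expected / observed frequency of its (group, label) cell.

    w(g, y) = P(g) * P(y) / P(g, y) = (n_g * n_y) / (n * n_gy)
    Cells rarer than independence would predict get weights above 1.
    """
    n = len(labels)
    n_g = Counter(groups)
    n_y = Counter(labels)
    n_gy = Counter(zip(groups, labels))
    return [n_g[g] * n_y[y] / (n * n_gy[(g, y)]) for g, y in zip(groups, labels)]

def weighted_positive_rate(weights, labels, groups, group):
    """Positive-label rate of one group, counting each example by its weight."""
    pairs = [(w, y) for w, y, g in zip(weights, labels, groups) if g == group]
    return sum(w * y for w, y in pairs) / sum(w for w, _ in pairs)

groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

# Unweighted, A is positive 75% of the time and B 25%; weighted, both come out at 0.5.
for g in ("A", "B"):
    print(g, round(weighted_positive_rate(weights, labels, groups, g), 3))
```

The weights would then be passed to a learner that accepts per-sample weights (many do, through a `sample_weight`-style argument), so the model trains on data in which neither group is over-associated with the positive label.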

3. Challenges in Generative AI

Generative AI tools like ChatGPT learn by analyzing large amounts of text written by people. Because of this, they can sometimes repeat unfair ideas found in that text, such as linking certain jobs or behaviors with a specific gender or race. This can lead to biased or unbalanced responses.

To reduce this problem, developers should carefully test how the AI responds to different types of questions and situations. If they notice unfair patterns, they should adjust the training process, add more balanced examples, or set rules to help the AI avoid reinforcing stereotypes. These steps help make the technology fairer and more respectful for everyone.
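One common way to run this kind of testing is counterfactual prompting: send prompts that are identical except for a demographic term and compare the answers. The sketch below assumes a hypothetical `model` function that maps a prompt to a reply; `biased_stub` is a deliberately biased toy stand-in, not a real system, and the templates and term pairs are illustrative.

```python
import re
from typing import Callable, List, Tuple

TEMPLATES = [
    "Describe a typical day for a {person} who works as a nurse.",
    "Write a one-line reference for a {person} applying to be an engineer.",
]
TERM_PAIRS = [("man", "woman")]

def mask(text: str, term: str) -> str:
    """Replace whole-word occurrences of a term so only other differences remain."""
    return re.sub(rf"\b{re.escape(term)}\b", "<TERM>", text)

def counterfactual_check(
    model: Callable[[str], str],
    templates: List[str],
    term_pairs: List[Tuple[str, str]],
) -> List[Tuple[str, str, str]]:
    """Return (template, answer_a, answer_b) for prompt pairs whose answers differ."""
    flagged = []
    for template in templates:
        for a, b in term_pairs:
            out_a = model(template.format(person=a))
            out_b = model(template.format(person=b))
            if mask(out_a, a) != mask(out_b, b):
                flagged.append((template, out_a, out_b))
    return flagged

def biased_stub(prompt: str) -> str:
    """Deliberately biased toy stand-in for a real model call (hypothetical)."""
    if re.search(r"\bwoman\b", prompt) and "engineer" in prompt:
        return "She may find the technical work challenging."
    return "This person seems well suited to the role."

for template, out_a, out_b in counterfactual_check(biased_stub, TEMPLATES, TERM_PAIRS):
    print(template, "|", out_a, "|", out_b)
```

Real model outputs vary from run to run, so in practice you would compare a score (for example a sentiment or suitability rating) over many samples rather than exact text; exact matching works here only because the stub is deterministic.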

The Future of Fairness in AI

In the coming years, new technology and new rules will work together to make AI fairer and more trustworthy. Researchers and companies are building tools like explainable AI (software that explains its own decisions), bias-detection programs (that look for unfair patterns), and training methods designed to treat all groups equally.

Meanwhile, governments and international organizations are writing new laws and guidelines (such as the European Union’s AI Act and UNESCO’s AI ethics recommendations) that require AI systems to be clear about how they work and to treat everyone fairly. Together, these advances aim to help future AI systems serve people from all walks of life, treat everyone equally, and earn public trust by being open and unbiased.

Conclusion

Fairness in AI is not just a technical challenge; it’s a moral imperative. In 2025, as AI becomes more pervasive, ensuring equitable outcomes is critical for building trust and meeting legal requirements. Developers must prioritize fairness measures, and users must demand transparency. Together, we can create AI systems that serve everyone equally.

Call to Action:

Audit your AI systems for bias using tools like Fairlearn. Support regulations like the EU AI Act and advocate for fairness in AI development.

FAQs (People Also Ask):

Q: What is fairness in AI?

A: Ensuring AI systems treat individuals and groups equitably, without discrimination or bias.

Q: What is one challenge in ensuring fairness in generative AI?

A: Generative AI can amplify biases in training data, leading to unfair or harmful outputs.

Q: What purpose do fairness measures serve in AI product development?

A: They ensure equitable outcomes, build user trust, and comply with legal requirements.


1 thought on “Fairness in AI: Building Equitable and Trustworthy Technology in 2025”