The term “deepfake” was coined in 2017, marking a major milestone in synthetic media creation. Deepfake technology uses generative AI tools to manipulate existing video or audio, or to generate new media from it.

In this blog post, you will learn what deepfakes are and what the dark side of deepfakes looks like.

Let’s get started!

What are deepfakes?

Deepfake technology did not appear out of nowhere. During the 1990s and early 2000s, key developments in video editing and machine learning laid the foundation for it.

Tools like Adobe Premiere (1991) and After Effects (1993) enabled advanced video manipulation techniques such as motion tracking and chroma keying.

At the same time, machine learning progressed with the rise of methods like Support Vector Machines (1995) and a renewed focus on neural networks, especially for tasks like face recognition. These advances laid the groundwork for deepfakes.

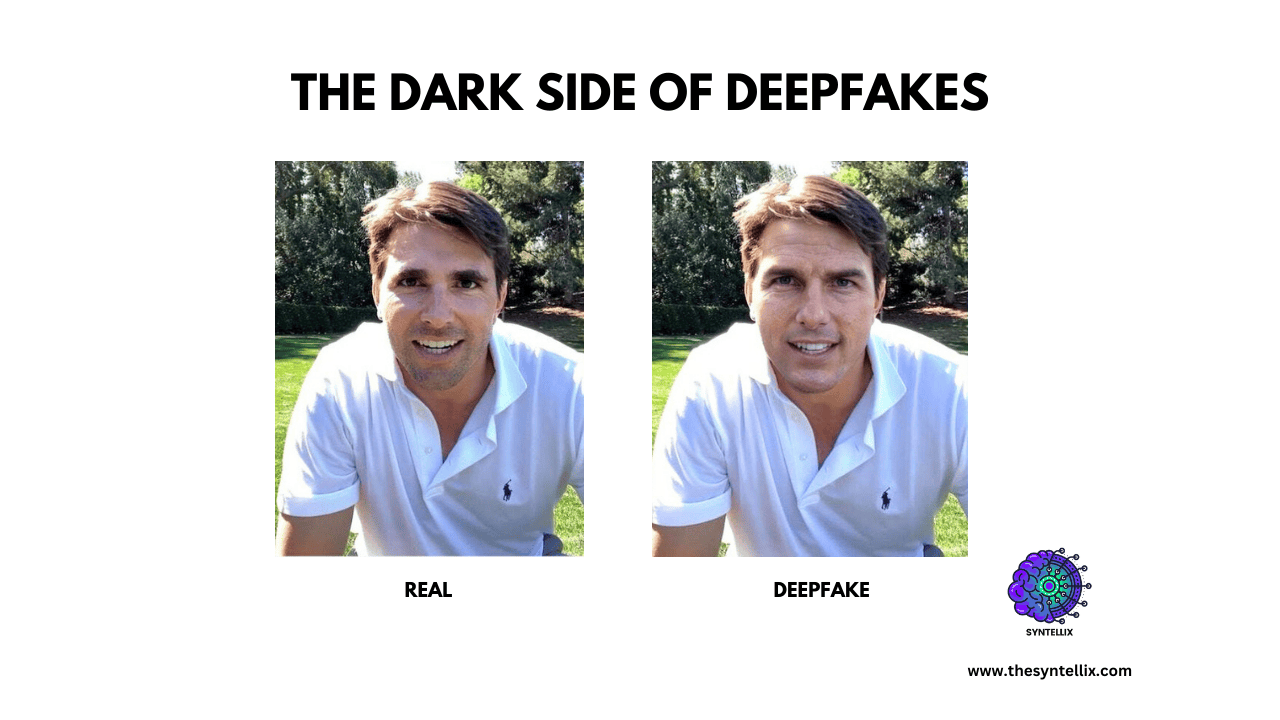

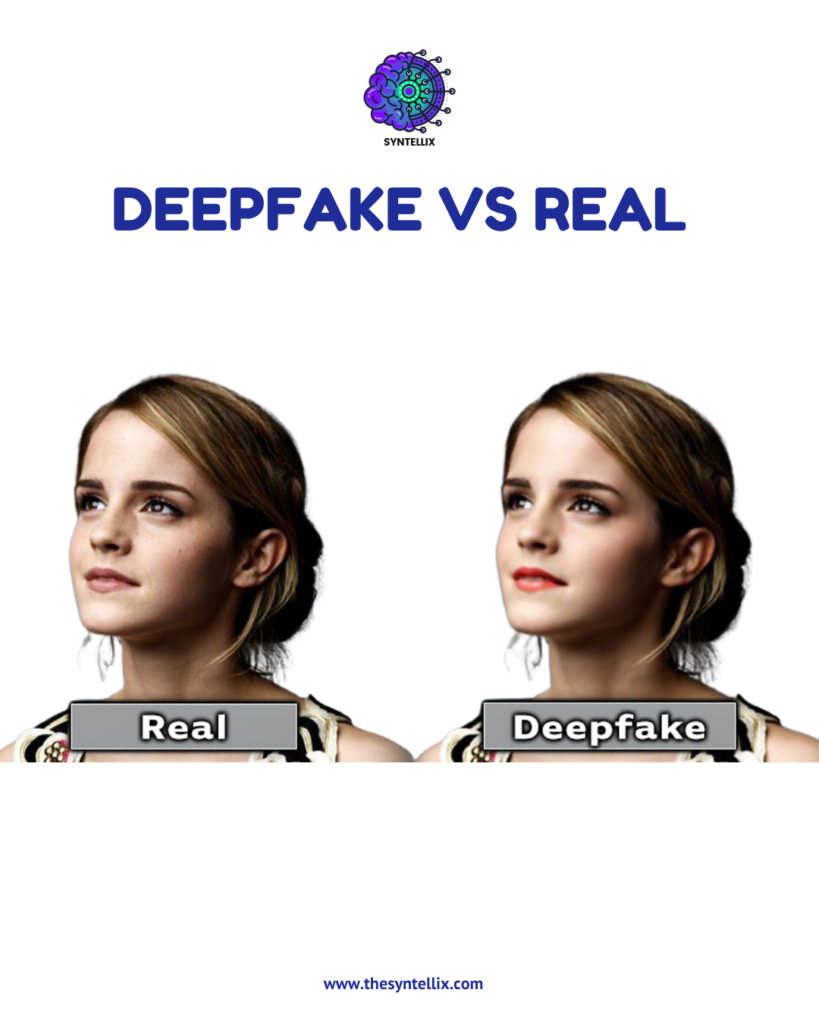

Deepfakes are media generated with artificial intelligence, in which a person’s face or body movements in images and videos are swapped or synthesized using generative AI. The term “deepfake” combines the words “deep learning” and “fake”.

The Dark Side of Deepfake Technology

While deepfakes have made headlines for their creative and entertaining uses, there’s a much more serious side to this technology that is increasingly concerning. As deepfake tools become more accessible, they’re being used in ways that challenge the trust we place in media, institutions, and even one another.

One major concern is the growing difficulty in telling what’s real from what’s fake. Deepfake videos and audio can mimic real people with alarming accuracy.

This makes it easier for bad actors to spread misinformation that looks authentic—whether it’s fake news reports, doctored political speeches, or impersonated voices in fraudulent calls.

For journalists, activists, and everyday users, verifying content has become more complex than ever.

In politics, deepfakes can be weaponized to damage reputations or mislead voters. A convincing fake video of a candidate saying something controversial can spread rapidly before fact-checkers have time to respond. Even if it’s later debunked, the harm to public perception may already be done.

This erosion of trust goes beyond politics. Deepfake content can weaken public confidence in courts, law enforcement, and news outlets.

When people begin to doubt everything they see or hear, it becomes harder to maintain shared truths. This growing skepticism is sometimes called “reality apathy”: people give up on telling real from fake, and even genuine evidence can be brushed off as fabricated.

A particularly harmful impact of deepfake technology has been seen in its misuse against women. One of the earliest notorious tools, DeepNude, allowed users to create fake explicit images of women using publicly shared photos. This opened the door to harassment, blackmail, and the spread of non-consensual content—causing real emotional and social harm.

The potential for fraud is another growing issue. With many apps now requiring users to verify identity through facial images or voice recordings, deepfakes can be used to bypass these checks. Criminals can impersonate someone using AI-generated visuals or sounds, making financial scams and data breaches easier to execute.

Journalism also faces challenges. Deepfakes can trick reporters into covering false stories, damaging their credibility. At the same time, real news can be discredited by those claiming it’s fake—further undermining the role of accurate reporting in society.

DEEPFAKES Accountability Act

To address these threats, the DEEPFAKES Accountability Act was reintroduced in the U.S. House of Representatives in 2023 (an earlier version of the bill was first introduced in 2019). The bill aims to regulate deepfake content by requiring creators to clearly label or watermark AI-generated media. Whether it’s a video, image, or audio clip, the content would have to include a visible or audible indication that it is synthetic.

Under the bill, anyone who removes these labels or shares misleading deepfakes without disclosure, especially with intent to defraud, mislead, incite violence, or influence elections, could face legal penalties. These could include fines, jail time, or civil action from victims. The bill would also require software companies to support identification tools and direct federal agencies to monitor AI-generated threats.

In simple terms, the bill’s goal is to protect people from being misled or harmed by unlabeled deepfakes and to preserve the integrity of digital content in an increasingly AI-driven world.

The Positive Side of Deepfakes

Despite the growing concerns around misuse, deepfake technology also holds meaningful potential when applied responsibly.

In the entertainment industry, it’s opening new creative doors—allowing filmmakers to recreate historical figures, de-age actors, or even dub content seamlessly across languages without losing emotional nuance. This can enhance storytelling while reducing production costs and time.

In education and training, deepfakes can bring lessons to life. Imagine students learning directly from a realistic recreation of a historical leader or doctors practicing procedures in highly personalized simulations. It enables more immersive, engaging, and customized experiences.

Deepfakes are also being explored for accessibility. Voice cloning and facial animation technologies can help those with speech impairments communicate more naturally, or assist in creating real-time translations for global communication.

When governed with ethical safeguards and transparency, deepfakes can enhance industries, support innovation, and create more inclusive digital experiences.

Frequently Asked Questions

What are deepfakes?

Deepfakes are fake videos, images, or audio created using artificial intelligence (AI) to make it look or sound like someone did or said something they never actually did.

Why are deepfakes considered dangerous?

Deepfakes can spread misinformation, ruin reputations, influence elections, cause public panic, and even create fake evidence in legal cases. They blur the line between real and fake, making it hard to know what to believe.

How can deepfakes affect politics?

Deepfakes can be used to create fake speeches or actions by politicians, which can mislead voters, damage trust, or even start international conflicts if believed to be true.

Can deepfakes be used to harm individuals?

Yes. Deepfakes are often used to create non-consensual explicit videos, cyberbully others, or blackmail people by placing their faces in fake but believable content.

How do deepfakes hurt journalism?

They can fool journalists into reporting false information or make real news look fake, which can undermine trust in the media and confuse the public.

Are deepfakes illegal?

Creating a deepfake is not illegal in itself in many places, but using one to cause harm, such as fraud, harassment, or election interference, can be. Laws for dealing with deepfakes are still developing in many countries.

Can deepfakes threaten national security?

Yes. Deepfakes can be used for disinformation campaigns, cyber warfare, or to manipulate public opinion, all of which can threaten national security.

How can I spot a deepfake?

Look for signs like blurry edges, unnatural blinking, jerky movements, or inconsistent lighting. Keep in mind, though, that deepfakes are becoming more realistic, so they are not always easy to spot.

What should I do if I see a harmful deepfake?

Report it to the platform where you found it (like YouTube or Facebook), and if it’s serious (like harassment or impersonation), report it to authorities as well.
