You know, sometimes, the smartest solutions aren’t found in giant, complex supercomputers humming away.

Instead, they’re right there, buzzing, crawling, or flitting around us in nature, hidden in plain sight.

Have you ever just stopped and watched an ant colony in action? Or perhaps seen a massive flock of birds dance across the sky, turning and twisting as one without a single collision?

That, my friends, is Swarm Intelligence playing out right before your eyes. It’s absolutely captivating. We’re talking about intelligent swarming, where millions of tiny, simple individuals somehow create something truly brilliant and complex, all without a boss or a central plan. Pretty cool, right?

If you’ve ever found yourself asking, “Hey, what is swarm intelligence, anyway?” or “Just how does swarm intelligence work its magic?”, then you’re in the perfect spot. We’re not just scratching the surface; we’re diving deep into everything from the fascinating history of swarm intelligence to its game-changing applications in today’s tech world. Trust me, you’ll want to read every word.

Let’s explore!

Swarm Intelligence Overview

So, let’s get down to brass tacks: what is this “swarm intelligence” we keep talking about?

Imagine you’ve got a bunch of relatively simple guys – maybe they’re ants, maybe they’re birds, or maybe they’re just little bits of code floating around.

Each one follows a few straightforward rules, acts on its own, and only talks to its immediate neighbors or senses what’s happening right next to it.

When enough of these simple individuals start interacting in this local way, they suddenly, almost magically, produce unbelievably complex and intelligent behaviors as a group. No one’s in charge, no one’s giving orders from the top. It just… emerges.

That’s the essence of Swarm Intelligence (SI). It’s the collective smarts that bubble up from decentralized, self-organizing systems.

The term “swarm intelligence” was coined in 1989 by Gerardo Beni and Jing Wang, who were studying cellular robotic systems, at a time when researchers were starting to explore how natural systems could inspire computing.

Today, you can find swarm intelligence everywhere from robotics to data analysis, making systems smarter, more adaptable, and more human-like in their teamwork.

This isn’t just some cool academic concept; it’s actively inspiring new advances in artificial intelligence. We’re talking about tackling problems that would make a traditional, single-brain AI scratch its digital head.

How Swarm Intelligence Works

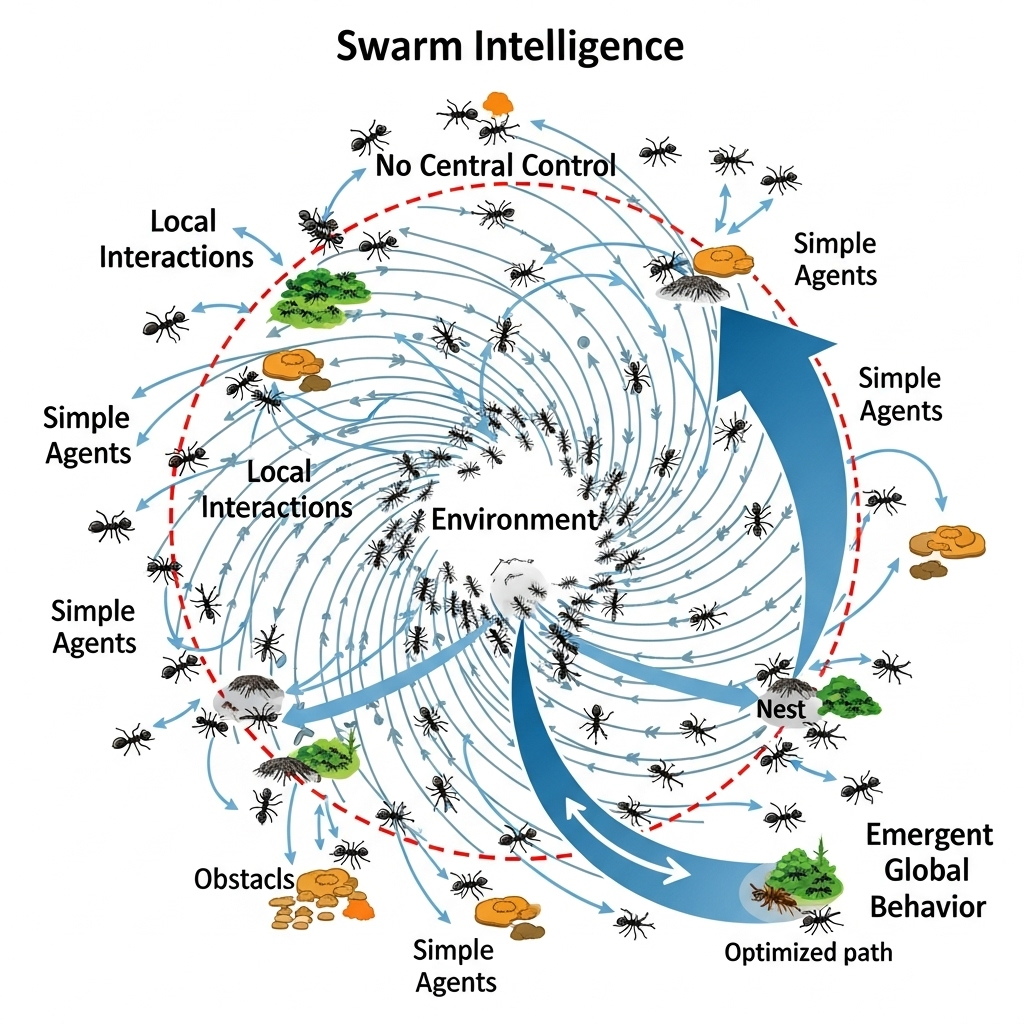

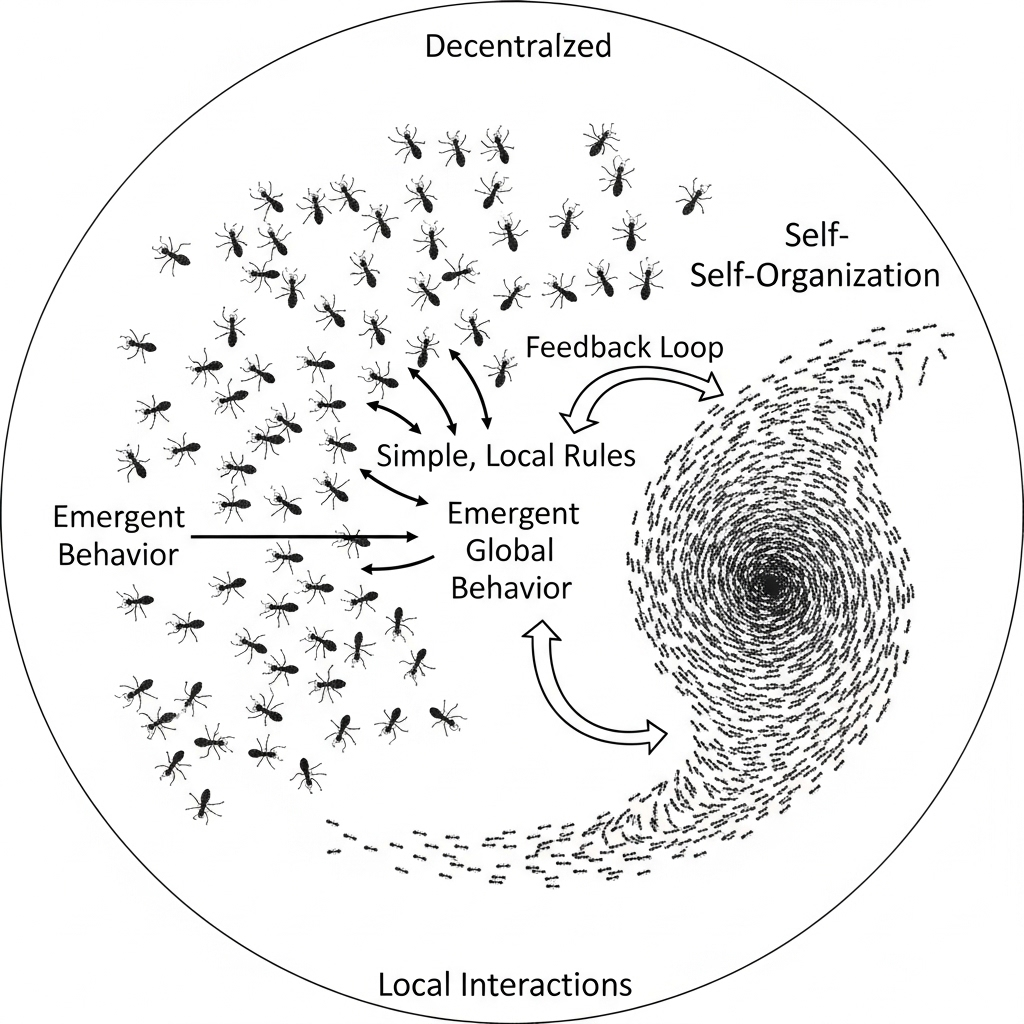

Okay, so if there’s no leader, how does any of this get done?

At its core, swarm intelligence operates on a principle that might seem counterintuitive at first: there is no central command.

Unlike a traditional military operation with a general dictating every move, a swarm functions without a leader, a blueprint, or even a global awareness of its mission.

Instead, each individual agent, be it an ant, a bird, or a digital particle in an algorithm, follows a set of incredibly simple, local rules.

Think of rules like “if you see food, pick it up and go home,” or “if your neighbor changes direction, follow suit.”

These agents don’t possess high-level reasoning or a grand strategy; their interactions are strictly confined to their immediate surroundings or their nearest peers.

Yet, from these myriad local interactions and basic rule-following, something truly profound occurs: complex, intelligent group behaviors naturally emerge.

It’s not programmed in directly; it simply arises from the bottom-up, as individuals collectively explore, share information (often through changes in their environment, like pheromones, or simple movements), and adapt.

This continuous exchange creates powerful feedback loops, where successful individual actions are amplified and propagated through the swarm, subtly guiding the entire collective towards good (often near-optimal) solutions. Because no single member is essential, the group can do this more robustly than a single, centrally controlled agent, whose failure would stop everything.
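To make the “if your neighbor changes direction, follow suit” rule concrete, here is a minimal Python sketch. It assumes a hypothetical setup: agents standing in a line, each holding a heading in degrees kept between 0 and 90 so that plain averaging is safe (real compass headings would need wrap-around handling). The function names `align_step` and `spread` are illustrative, not from any library. On every step, each agent looks only at itself and its immediate neighbors and adopts their average heading; no agent ever sees the whole group, yet the headings converge to a shared direction.

```python
import random


def align_step(headings):
    """One synchronous update of a simple, hypothetical alignment rule.

    Each agent adopts the average heading of itself and its immediate
    neighbors in the line. Headings stay in 0-90 degrees, so plain
    averaging is safe and no wrap-around handling is needed.
    """
    n = len(headings)
    updated = []
    for i in range(n):
        # Local view only: the agent plus its left and right neighbors
        neighborhood = headings[max(0, i - 1): i + 2]
        updated.append(sum(neighborhood) / len(neighborhood))
    return updated


def spread(values):
    """Difference between the largest and smallest heading, in degrees."""
    return max(values) - min(values)


if __name__ == "__main__":
    random.seed(42)
    headings = [random.uniform(0, 90) for _ in range(10)]
    print(f"initial spread: {spread(headings):.3f} degrees")
    for _ in range(500):
        headings = align_step(headings)
    print(f"final spread:   {spread(headings):.6f} degrees")
```

Every agent uses the same rule, and information only ever travels one neighbor per step, yet the whole line ends up aligned. That is the decentralized, emergent behavior described above in miniature.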

The beauty of swarm intelligence really clicks when you grasp its foundational principles:

Decentralization: There is no single point of failure here. Every agent (think individual ant or code particle) makes its own decisions. If one goes rogue or drops out, the rest just keep on trucking. That’s super important for building robust systems.

Self-Organization: Complex patterns and group behaviors just appear without anyone directly programming them in. It’s like a flash mob where everyone just knows the dance, without a choreographer shouting commands.

Local Interactions, Global Impact: Forget the need to see the whole picture. Each agent only cares about what its immediate buddies are doing or what’s happening in its tiny corner of the environment. Yet, these small, local interactions add up to massive, system-wide intelligence.

Emergent Behavior: What makes the swarm “intelligent” – like finding the absolute best route or perfectly optimizing something – isn’t something you install into each individual. Nope, it emerges from the combined efforts and simple rule-following of the entire group. It’s truly fascinating to watch.

Feedback Loops (Give and Take): Often, agents leave little “hints” in their environment, or they react to hints left by others. Think of ants dropping pheromones. Positive feedback helps reinforce the good stuff (like a successful path), while negative feedback might steer them away from dead ends.
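The interplay of positive feedback (reinforcement) and negative feedback (evaporation) shows up even in a tiny simulation. This is a minimal sketch under stated assumptions: two hypothetical paths between nest and food, one of length 1 and one of length 2; each ant picks a path with probability proportional to its pheromone level, deposits an amount inversely proportional to the path length, and a fixed fraction of all pheromone evaporates every round. The function name, deposit rule, and evaporation rate are illustrative choices, loosely in the spirit of the classic “double bridge” ant experiments, not a model taken from a specific paper.

```python
import random


def simulate_double_bridge(rounds=50, ants_per_round=20, evaporation=0.1, seed=0):
    """Hypothetical two-path pheromone model; returns final pheromone per path."""
    rng = random.Random(seed)
    lengths = {"short": 1.0, "long": 2.0}
    pheromone = {"short": 1.0, "long": 1.0}  # start with no preference

    for _ in range(rounds):
        deposits = {"short": 0.0, "long": 0.0}
        total = pheromone["short"] + pheromone["long"]
        for _ in range(ants_per_round):
            # Each ant only senses pheromone levels; stronger trails attract more ants
            path = "short" if rng.random() < pheromone["short"] / total else "long"
            # Positive feedback: shorter trips leave more pheromone per ant
            deposits[path] += 1.0 / lengths[path]
        for path in pheromone:
            # Negative feedback: evaporation erodes every trail, so unused trails fade
            pheromone[path] = (1 - evaporation) * pheromone[path] + deposits[path]
    return pheromone


if __name__ == "__main__":
    trails = simulate_double_bridge()
    share = trails["short"] / (trails["short"] + trails["long"])
    print(f"share of pheromone on the short path: {share:.2f}")
```

Without evaporation, the early random choices would linger forever; with it, the long path’s trail fades while the short path keeps getting topped up, so the colony’s “preference” emerges without any ant comparing the two routes.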

These core characteristics of swarm intelligence aren’t just cool observations from nature. They are blueprints for building systems that are incredibly flexible, scale up like crazy, and are super resilient, qualities that are gold in the world of artificial intelligence.

History Of Swarm Intelligence

Believe it or not, while swarm intelligence and evolutionary computation feel super high-tech, their inspiration comes straight from the ground (and the sky!). The history of swarm intelligence in computing is quite young, really, but it’s built on centuries of observing how living things solve problems collectively.

Early pioneers looked at nature’s solutions and thought, “Hey, can we make a computer do that?”

Ant Colony Optimization (ACO): Back in the early 90s, Marco Dorigo literally looked at ants and how they always seem to find the shortest path to food. He realized it wasn’t about a genius ant leading the way, but about how they leave “scent trails” (pheromones) that others follow. Ants on a shorter path complete their round trips sooner, so pheromone builds up there faster, which attracts more ants, which lay down still more pheromone, and boom: the colony settles on the shortest route. Pretty smart for a bunch of tiny insects, right?

Particle Swarm Optimization (PSO): A few years later, in 1995, James Kennedy and Russell Eberhart thought about bird flocks and fish schools. How do they move so gracefully together? They realized individuals learn from their own best experiences and from what the entire group seems to be doing best. That idea became PSO, where little “particles” (our potential solutions) zip around, learning from their own successes and the swarm’s overall progress.

Artificial Bee Colony (ABC): This one’s all about bees and their tireless search for nectar. It models how bees explore new areas, exploit rich sources, and share information to optimize their foraging.
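The three ABC roles (employed bees working a known food source, onlookers favoring richer sources, scouts abandoning exhausted ones) can be sketched in a few dozen lines. This is a simplified, illustrative version that minimizes the Sphere function; the function name `abc_minimize`, its parameters (`num_sources`, `limit`, `cycles`), and their defaults are assumptions made for this sketch, not a reference implementation. The quality formula `1 / (1 + cost)` assumes non-negative costs, which holds for the Sphere function.

```python
import random


def sphere(x):
    return sum(v * v for v in x)


def abc_minimize(f, dim=2, bounds=(-5.0, 5.0), num_sources=10, limit=20, cycles=200, seed=1):
    """Simplified Artificial Bee Colony sketch (minimization, non-negative costs)."""
    rng = random.Random(seed)
    lo, hi = bounds

    def random_source():
        return [rng.uniform(lo, hi) for _ in range(dim)]

    sources = [random_source() for _ in range(num_sources)]
    costs = [f(s) for s in sources]
    trials = [0] * num_sources  # how many tries each source has gone without improving

    def try_neighbor(i):
        # Perturb one coordinate relative to a randomly chosen other source
        k = rng.choice([j for j in range(num_sources) if j != i])
        d = rng.randrange(dim)
        candidate = sources[i][:]
        candidate[d] += rng.uniform(-1, 1) * (sources[i][d] - sources[k][d])
        candidate[d] = min(max(candidate[d], lo), hi)
        cost = f(candidate)
        if cost < costs[i]:  # greedy selection: keep the better source
            sources[i], costs[i], trials[i] = candidate, cost, 0
        else:
            trials[i] += 1

    best = min(zip(costs, sources))
    for _ in range(cycles):
        # Employed bees: each one works its own food source
        for i in range(num_sources):
            try_neighbor(i)
        # Onlooker bees: choose sources with probability proportional to quality
        quality = [1.0 / (1.0 + c) for c in costs]
        for _ in range(num_sources):
            i = rng.choices(range(num_sources), weights=quality)[0]
            try_neighbor(i)
        # Scout bees: abandon sources that have stopped improving
        for i in range(num_sources):
            if trials[i] > limit:
                sources[i] = random_source()
                costs[i] = f(sources[i])
                trials[i] = 0
        best = min(best, min(zip(costs, sources)))
    return best[1], best[0]


if __name__ == "__main__":
    position, cost = abc_minimize(sphere)
    print(f"best position: {position}, cost: {cost:.6f}")
```

Onlookers are where information sharing happens: richer sources get more follow-up visits, while scouts keep the colony from getting stuck on sources that have stopped improving.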

These early algorithms were like the blueprints for a whole new way of thinking about computation. They showed us that drawing inspiration from biology could unlock powerful new ways to solve tough optimization problems, creating a beautiful synergy between nature and code. These aren’t just isolated tricks; they form the very fundamentals of computational swarm intelligence.

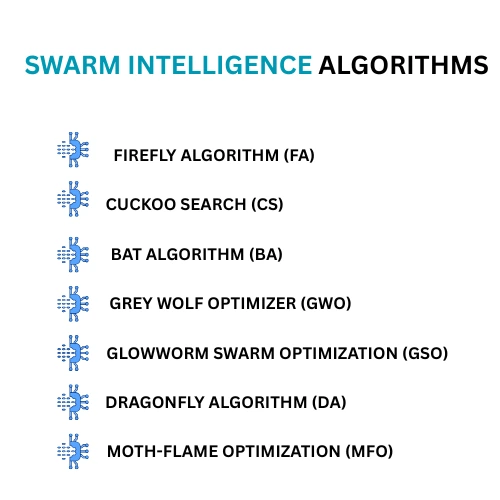

A List of Swarm Intelligence Algorithms

The field has truly exploded since those initial breakthroughs, giving us an amazing list of swarm intelligence algorithms. Each one has its own unique twist, inspired by different natural phenomena, but they all share those core principles of collective, decentralized problem-solving. Beyond the “big three” we just talked about (ACO, PSO, ABC), you might hear about:

Firefly Algorithm (FA): Imagine fireflies flashing. The brighter ones attract others, and this simple rule helps the “swarm” converge on optimal solutions.

Cuckoo Search (CS): A bit more unconventional, this one’s inspired by how cuckoo birds lay their eggs in other birds’ nests. It’s all about clever exploration and exploitation.

Bat Algorithm (BA): Think of bats using echolocation to hunt. The algorithm mimics their sonar-like sensing to find the best spots in a search space.

Grey Wolf Optimizer (GWO): Inspired by the disciplined hunting strategies and social hierarchy within a pack of grey wolves.

Glowworm Swarm Optimization (GSO): This one looks at how glowworms communicate via light intensity to find the best spots.

Dragonfly Algorithm (DA): It actually models both how dragonflies search for food (static swarming) and how they migrate (dynamic swarming).

Moth-Flame Optimization (MFO): Ever noticed how moths seem drawn to flames? This algorithm simulates their unique navigation method to find solutions.
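To show what “the brighter ones attract others” means in code, here is a simplified Firefly Algorithm sketch for minimization, where a lower cost means a brighter firefly. It is illustrative only: the function name `firefly_minimize` and the defaults for attractiveness `beta0`, light absorption `gamma`, and random step size `alpha` are assumptions for this sketch. In this version, a firefly with nobody brighter than it takes a small random step instead, and the random step shrinks over time so the swarm can settle.

```python
import math
import random


def sphere(x):
    return sum(v * v for v in x)


def firefly_minimize(f, dim=2, bounds=(-5.0, 5.0), n=15, iterations=100,
                     beta0=1.0, gamma=0.1, alpha=0.5, seed=3):
    """Simplified Firefly Algorithm sketch for minimization (lower cost = brighter)."""
    rng = random.Random(seed)
    lo, hi = bounds

    def clamp(v):
        return min(max(v, lo), hi)

    swarm = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n)]
    costs = [f(x) for x in swarm]
    best_cost = min(costs)
    best_pos = swarm[costs.index(best_cost)][:]

    for _ in range(iterations):
        for i in range(n):
            moved = False
            for j in range(n):
                if costs[j] < costs[i]:  # j is brighter, so i is attracted to it
                    r2 = sum((a - b) ** 2 for a, b in zip(swarm[i], swarm[j]))
                    beta = beta0 * math.exp(-gamma * r2)  # attraction fades with distance
                    swarm[i] = [clamp(a + beta * (b - a) + alpha * (rng.random() - 0.5))
                                for a, b in zip(swarm[i], swarm[j])]
                    costs[i] = f(swarm[i])
                    moved = True
            if not moved:  # nobody is brighter: take a small random step instead
                swarm[i] = [clamp(a + alpha * (rng.random() - 0.5)) for a in swarm[i]]
                costs[i] = f(swarm[i])
            if costs[i] < best_cost:
                best_cost, best_pos = costs[i], swarm[i][:]
        alpha *= 0.97  # shrink the random step over time
    return best_pos, best_cost


if __name__ == "__main__":
    position, cost = firefly_minimize(sphere)
    print(f"best position: {position}, cost: {cost:.6f}")
```

Because attraction decays with distance (`gamma`), fireflies mostly respond to bright neighbors nearby, which lets several local groups form instead of everyone rushing to a single point.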

Each of these, and many, many more, offers a fresh perspective on how to tackle tricky problems. They all add to the incredible arsenal of evolutionary and swarm intelligence algorithms, proving just how much we can learn from even the simplest natural behaviors.

Real-Time Example: Optimizing Hyperparameters in Machine Learning

Imagine you’re building a sophisticated machine learning model – say, a neural network – for a task like real-time fraud detection or predicting stock prices.

These models often have numerous “hyperparameters” (things like learning rate, number of layers, regularization strength) that aren’t learned during training but must be set before training.

The performance of your model heavily depends on finding a good combination of these hyperparameters. Trying every possible combination is impractical, because the number of combinations multiplies with each hyperparameter you add, and it becomes even less feasible if you need to re-tune your model frequently based on new data or changing conditions.

This is where Particle Swarm Optimization shines as a “real-time” or near real-time application. A PSO algorithm can be used to dynamically search for the best hyperparameter settings.

How it feels “real-time”: Instead of a human manually tweaking parameters and retraining the model over days, the PSO algorithm continuously explores the parameter space.

It can be set up to run in the background, periodically suggesting better hyperparameter sets based on the model’s performance on validation data.

This allows for adaptive and efficient model tuning, especially in production environments where model performance needs to be consistently optimized as new data streams in.

For example, a trading algorithm might use PSO to fine-tune its risk parameters every hour, or a smart grid might use it to balance energy distribution in response to fluctuating demand.
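A particle’s position is just a vector of numbers, so applying PSO to hyperparameters needs a mapping from that vector to concrete settings. Below is a minimal sketch, assuming a three-dimensional particle whose coordinates each lie in [0, 1]. The helper `decode_position` and the ranges it uses (learning rate from 1e-5 to 1e-1 on a log scale, 1 to 8 layers, regularization strength from 0 to 0.1) are hypothetical choices for illustration. Searching the learning rate on a log scale lets the swarm spread its effort evenly across orders of magnitude.

```python
def decode_position(position):
    """Map a particle position in [0, 1]^3 to a hypothetical hyperparameter set."""
    # Clip defensively in case a particle overshoots the unit cube
    u_lr, u_layers, u_reg = (min(max(p, 0.0), 1.0) for p in position)

    # Learning rate on a log scale: 10**-5 ... 10**-1
    learning_rate = 10 ** (-5 + 4 * u_lr)
    # Integer number of layers: 1 ... 8
    num_layers = 1 + min(int(u_layers * 8), 7)
    # Regularization strength on a linear scale: 0.0 ... 0.1
    regularization = 0.1 * u_reg

    return {
        "learning_rate": learning_rate,
        "num_layers": num_layers,
        "regularization": regularization,
    }


if __name__ == "__main__":
    print(decode_position([0.5, 0.3, 0.2]))
```

The decoded dictionary is what you would hand to your own model-training code; the swarm itself only ever sees the raw vector and the validation score that comes back for it.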

Internal Working: The “Flocking” Logic of PSO

PSO draws its inspiration from the beautiful, synchronized movements of bird flocks or fish schools. It works like this:

The Swarm and Particles: We start with a “swarm” of multiple “particles.” Each particle represents a potential solution to our problem (in our example, a specific set of hyperparameters). These particles are randomly scattered across the “search space” (the range of all possible hyperparameter values).

Personal Best (pBest): As each particle “flies” through this space, it remembers the best solution (the set of hyperparameters that led to the lowest error or highest accuracy for that individual particle) it has ever found. This is its “personal best” position, often called pBest.

Global Best (gBest): All particles communicate their pBest to the rest of the swarm. The best pBest found by any particle in the entire swarm is then identified as the “global best” position, or gBest. This is the overall best solution found so far by the collective.

Updating Position and Velocity: Now, here’s the clever part. In each “iteration” (or time step), every particle adjusts its “velocity” (its direction and speed of movement) and then updates its “position” based on three factors:

Inertia: Its own previous velocity (a tendency to keep moving in the same general direction).

Cognitive Component: Its attraction towards its own pBest (a pull back towards what it knows worked well for itself).

Social Component: Its attraction towards the gBest (a pull towards what the entire group has discovered as the best).

This balance between its own experience and the swarm’s collective knowledge allows the particles to explore the search space efficiently while also converging towards promising areas.

Iteration: This process repeats for a set number of iterations or until a satisfactory solution is found. Over time, the entire swarm tends to converge around the gBest, which represents the best solution found so far: ideally the optimum, or at least a near-optimal solution to the problem.

It’s a beautiful example of how simple, localized rules, when applied across a group, lead to highly intelligent emergent behavior.
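Written as equations, one common form of the update described in the steps above is the following, where $x_i^t$ and $v_i^t$ are particle $i$’s position and velocity at iteration $t$, $p_i$ is its pBest, $g$ is the gBest, and $r_1, r_2$ are fresh uniform random numbers in $[0, 1]$:

$$
\begin{aligned}
v_i^{t+1} &= w\,v_i^{t} + c_1 r_1 \left(p_i - x_i^{t}\right) + c_2 r_2 \left(g - x_i^{t}\right) \\
x_i^{t+1} &= x_i^{t} + v_i^{t+1}
\end{aligned}
$$

The three terms of the velocity update are exactly the inertia, cognitive, and social components, with $w$ the inertia weight and $c_1$, $c_2$ the cognitive and social coefficients. Some variants draw $r_1$ and $r_2$ separately for every dimension, and many implementations also clip positions or velocities to keep particles inside the search space.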

Python Code Example for Basic PSO

Let’s illustrate PSO with a simple Python implementation. We’ll use it to find the minimum of a common mathematical function called the “Sphere function,” which is $f(\mathbf{x}) = \sum_{i=1}^{D} x_i^2$, where $D$ is the number of dimensions. The minimum of this function is 0, reached at $x_i = 0$ for all $i$.

```python
import random

import numpy as np


# --- 1. Define the Objective Function (what we want to minimize) ---
# Here, it's the Sphere function: f(x) = sum(x_i^2)
def sphere_function(position):
    """Calculates the fitness value for a given particle position."""
    return sum(x**2 for x in position)


# --- 2. Particle Class ---
class Particle:
    def __init__(self, dimensions, min_bound, max_bound, objective_function=sphere_function):
        self.dimensions = dimensions
        self.min_bound = min_bound
        self.max_bound = max_bound
        # The function this particle evaluates at every position it visits
        self.objective_function = objective_function

        # Initialize position randomly within bounds
        self.position = np.array([random.uniform(min_bound, max_bound) for _ in range(dimensions)])

        # Initialize velocity randomly (up to the full width of the search range)
        span = abs(max_bound - min_bound)
        self.velocity = np.array([random.uniform(-span, span) for _ in range(dimensions)])

        # Evaluate the initial position; it is also the particle's first personal best
        self.fitness = self.objective_function(self.position)
        self.personal_best_fitness = self.fitness
        self.personal_best_position = np.copy(self.position)

    def update_velocity(self, global_best_position, w, c1, c2):
        """Updates the particle's velocity based on its pBest and gBest."""
        r1 = random.random()  # Random weight for the cognitive component
        r2 = random.random()  # Random weight for the social component

        # Cognitive component: pull towards personal best
        cognitive_velocity = c1 * r1 * (self.personal_best_position - self.position)

        # Social component: pull towards global best
        social_velocity = c2 * r2 * (global_best_position - self.position)

        # Update velocity (inertia + cognitive + social)
        self.velocity = (w * self.velocity) + cognitive_velocity + social_velocity

        # Optional: Velocity clamping (to prevent particles from flying too far)
        # max_velocity = (self.max_bound - self.min_bound) * 0.2  # Example: 20% of search space range
        # self.velocity = np.clip(self.velocity, -max_velocity, max_velocity)

    def update_position(self):
        """Moves the particle, keeps it in bounds, and refreshes its personal best."""
        self.position = self.position + self.velocity

        # Ensure particles stay within defined bounds
        self.position = np.clip(self.position, self.min_bound, self.max_bound)

        # Update fitness and personal best
        self.fitness = self.objective_function(self.position)
        if self.fitness < self.personal_best_fitness:
            self.personal_best_fitness = self.fitness
            self.personal_best_position = np.copy(self.position)


# --- 3. PSO Algorithm Function ---
def pso_optimize(objective_function, dimensions, min_bound, max_bound,
                 num_particles=30, max_iterations=100,
                 w=0.729, c1=1.49445, c2=1.49445):
    """
    Implements the Particle Swarm Optimization algorithm.

    Args:
        objective_function: The function to be minimized.
        dimensions (int): The number of dimensions of the search space.
        min_bound (float): The lower bound for the search space.
        max_bound (float): The upper bound for the search space.
        num_particles (int): The number of particles in the swarm.
        max_iterations (int): The maximum number of iterations.
        w (float): Inertia weight.
        c1 (float): Cognitive coefficient (personal influence).
        c2 (float): Social coefficient (global influence).

    Returns:
        tuple: (global_best_position, global_best_fitness)
    """
    swarm = []
    global_best_position = np.array([0.0] * dimensions)
    global_best_fitness = float('inf')  # Initialize with a very high value

    # Initialize the swarm
    for _ in range(num_particles):
        particle = Particle(dimensions, min_bound, max_bound, objective_function)
        swarm.append(particle)

        # Update global best if this particle is better
        if particle.personal_best_fitness < global_best_fitness:
            global_best_fitness = particle.personal_best_fitness
            global_best_position = np.copy(particle.personal_best_position)

    # Main PSO Loop
    for i in range(max_iterations):
        for particle in swarm:
            particle.update_velocity(global_best_position, w, c1, c2)
            particle.update_position()

            # Update global best as soon as this particle has moved
            if particle.personal_best_fitness < global_best_fitness:
                global_best_fitness = particle.personal_best_fitness
                global_best_position = np.copy(particle.personal_best_position)

        # Optional: Print progress
        if i % 10 == 0 or i == max_iterations - 1:
            print(f"Iteration {i+1}/{max_iterations}: Current Best Fitness = {global_best_fitness:.6f}")

    return global_best_position, global_best_fitness


# --- 4. Run the PSO ---
if __name__ == "__main__":
    print("Starting Particle Swarm Optimization to find the minimum of the Sphere function...")

    # Problem parameters
    dimensions = 3  # Number of variables in our function (x, y, z in 3D)
    min_bound = -10.0  # Lower bound for each variable
    max_bound = 10.0  # Upper bound for each variable

    # PSO parameters
    num_particles = 50
    max_iterations = 200
    # Widely used constriction-derived settings (same as the function defaults).
    # Larger values such as c1 = c2 = 2.0 with w = 0.7 make the swarm more
    # explorative and noticeably slower to settle on the minimum.
    inertia_weight = 0.729  # w
    cognitive_coeff = 1.49445  # c1
    social_coeff = 1.49445  # c2

    best_pos, best_fit = pso_optimize(
        objective_function=sphere_function,
        dimensions=dimensions,
        min_bound=min_bound,
        max_bound=max_bound,
        num_particles=num_particles,
        max_iterations=max_iterations,
        w=inertia_weight,
        c1=cognitive_coeff,
        c2=social_coeff,
    )

    print("\n--- Optimization Complete ---")
    print(f"Optimal position found: {best_pos}")
    print(f"Minimum value found (fitness): {best_fit:.6f}")
    print("Expected minimum (at [0,0,0]): 0.0")
```

This code implements the Particle Swarm Optimization (PSO) algorithm to find the minimum value of a given mathematical function, in this case the simple Sphere function, $f(\mathbf{x}) = \sum_{i=1}^{D} x_i^2$.

Here’s a quick rundown of each main part:

`sphere_function(position)`: Purpose: This is the objective function (also called the “fitness function” or “cost function”). It’s the mathematical problem we want to solve or optimize.

How it works: It takes a `position` (an array of numbers representing a point in our search space) and returns a single number. For the Sphere function, it just sums the squares of all the numbers in the `position` array. Our goal is to find the `position` that makes this function’s output as small as possible.

`Particle` Class: Purpose: This class defines what an individual “particle” in our swarm is and how it behaves.

Attributes:

`position`: Where the particle currently is in the search space (our set of numbers).

`velocity`: How fast and in what direction the particle is moving.

`personal_best_position`: The best (lowest fitness) position this individual particle has ever found.

`personal_best_fitness`: The fitness value at that `personal_best_position`.

Methods:

`__init__`: Sets up a new particle with a random starting position and velocity, and evaluates that starting position.

`update_velocity`: This is the core PSO rule! It calculates how the particle’s velocity should change. It considers:

Its own current momentum (`w * self.velocity`).

Its attraction to its `personal_best_position` (`c1 * r1 * (pBest - current_position)`).

Its attraction to the `global_best_position` found by the entire swarm (`c2 * r2 * (gBest - current_position)`).

`update_position`: Moves the particle based on its new `velocity`, clips it back into the search bounds, and then updates its `personal_best_position` if it has found a better spot.

`pso_optimize(...)` Function: Purpose: This is the main PSO algorithm loop that orchestrates the entire swarm.

How it works:

It first initializes a `swarm` of `Particle` objects.

It keeps track of the `global_best_position` and `global_best_fitness`, the very best solution found by any particle in the entire swarm across all iterations.

It then runs for `max_iterations` iterations. In each iteration, it tells every particle in the `swarm` to `update_velocity` (based on its pBest and the current gBest) and then to `update_position`. Right after each particle moves, it checks whether that particle’s new `personal_best_fitness` beats the current `global_best_fitness` and updates gBest if so, so later particles in the same iteration already see the improved gBest.

This iterative process drives the entire swarm towards the optimal solution.

Main Execution Block (`if __name__ == "__main__":`): Purpose: This section sets up the parameters for the problem (e.g., number of dimensions, search bounds) and for the PSO algorithm itself (e.g., number of particles, iterations, `w`, `c1`, `c2`).

How it works: It calls the `pso_optimize` function with these parameters and then prints out the final optimal position (the coordinates where the minimum was found) and the minimum value (the fitness at that position).

In essence, this code simulates a “flock” of “particles” that collaboratively search for the best solution to a problem by learning from their own experiences and the experiences of the entire group.

Explore further into the fascinating world of Python by reading my main pillar post: Python For AI – My 5 Years Experience To Coding Intelligence


Conclusion:

So, as we’ve journeyed through this fascinating topic, it becomes clear: Swarm Intelligence is way more than just a neat idea.

It’s a game-changer, showing us how surprisingly simple, independent actions, when done by many, can create something incredibly smart and cohesive.

Just think about the quiet wisdom of an ant colony or the breathtaking dance of a bird flock—nature’s had the answers to complex problems tucked away in teamwork all along.

By taking these core principles of swarm intelligence and weaving them into powerful algorithms, we’re genuinely cracking open new possibilities in AI, machine learning, and so much more.

The future of smart systems isn’t just about building bigger, more complicated single brains; it’s increasingly about tapping into the graceful, resilient strength of the collective.

Turns out, true intelligence often blossoms from the wonderfully organized chaos of many working as one.

People Also Ask

What is swarm intelligence?

Swarm intelligence is a fascinating concept where the collective actions of many simple, decentralized individuals lead to complex, intelligent group behavior. Think of it this way: no single individual (like an ant, a bird, or a small robot) is particularly smart on its own, and there’s no central leader telling them what to do. Instead, by following basic rules and interacting only with their immediate neighbors or local environment, a surprisingly sophisticated “group mind” emerges. This collective intelligence allows the swarm to solve problems, adapt to changes, and perform tasks that would be impossible for any single member alone. It’s essentially smart behavior arising from bottom-up, collaborative chaos.

What are some examples of swarm intelligence?

You can see swarm intelligence all around you!

In Nature:

Ants finding the shortest path to food: They don’t have a map; they just leave pheromone trails. Shorter paths get more traffic, reinforcing stronger trails, eventually leading the entire colony to the most efficient route.

Birds flocking: Thousands of birds move as one fluid entity, turning instantaneously without colliding. Each bird simply follows basic rules like “stay close to your neighbors, but don’t crash into them.”

Fish schooling: Similar to bird flocking, fish move in synchronized patterns to avoid predators or conserve energy.

In Technology (Artificial Swarms):

Optimization Algorithms: Algorithms like Particle Swarm Optimization (PSO) or Ant Colony Optimization (ACO) are used in everything from optimizing delivery routes for logistics companies to fine-tuning parameters in machine learning models (like finding the best settings for a neural network).

Robotic Swarms: Imagine small, autonomous robots collaboratively mapping an unknown area, searching for survivors in a disaster zone, or even constructing structures, all without human intervention or central command. This is a rapidly developing area.

What are the core principles of swarm intelligence?

Swarm intelligence relies on a few elegant, core principles that explain how complex behavior emerges from simplicity:

Decentralization: There’s no single leader or central controller. Each agent acts independently, making the system incredibly robust and resilient to individual failures.

Self-Organization: Complex patterns, structures, and behaviors arise spontaneously from the local interactions among agents, without any explicit external instruction or global blueprint.

Local Interactions: Agents only interact with their immediate neighbors or their direct local environment. They don’t need to know what the entire system is doing, only what’s happening right next to them.

Emergent Behavior: The overall “intelligent” behavior of the swarm (e.g., solving a complex problem, finding an optimal path) is not pre-programmed into individual agents, but rather surfaces as a result of their collective, simple interactions.

Feedback: Agents often leave “traces” in their environment (like pheromones or digital markers) or respond to signals from others. This creates positive feedback loops that amplify successful actions, guiding the entire swarm towards better solutions.

Can humans exhibit swarm intelligence?

Yes, absolutely! Humans can and often do exhibit human swarm intelligence, sometimes called the “wisdom of the crowd.” While individual humans are far more complex than a single ant or particle, when we gather in groups and interact under certain conditions, our collective intelligence can emerge in ways that resemble natural swarms.

Examples include:

Crowdsourcing: Platforms where large groups collectively solve problems, answer questions, or generate ideas (e.g., Wikipedia, predicting election outcomes).

Prediction Markets: Aggregating the opinions of many individuals to predict future events, often outperforming individual experts.

Collective Decision-Making: Groups reaching consensus or making better overall decisions when individual biases are averaged out.

Traffic Flow: The emergent patterns in rush hour traffic, driven by individual drivers making local decisions.
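The idea that individual biases get “averaged out” can be illustrated with a small calculation. This sketch assumes a hypothetical jar-guessing task with a known true value and a handful of made-up guesses; the function name `crowd_vs_individuals` is illustrative. It compares the error of the crowd’s average guess with the average error of the individuals. By the triangle inequality, the crowd’s absolute error can never exceed the individuals’ average absolute error, and it is often much smaller when individual errors point in different directions.

```python
def crowd_vs_individuals(guesses, true_value):
    """Return (error of the average guess, average individual error)."""
    crowd_guess = sum(guesses) / len(guesses)
    crowd_error = abs(crowd_guess - true_value)
    mean_individual_error = sum(abs(g - true_value) for g in guesses) / len(guesses)
    return crowd_error, mean_individual_error


if __name__ == "__main__":
    # Hypothetical guesses of how many sweets are in a jar that really holds 500
    guesses = [420, 610, 480, 550, 390, 530, 505]
    crowd_error, individual_error = crowd_vs_individuals(guesses, 500)
    print(f"crowd error: {crowd_error:.1f}, average individual error: {individual_error:.1f}")
```

The guarantee only concerns the average individual, and it depends on errors partly canceling. If everyone shares the same bias (say, all guessers underestimate), the average inherits that bias, which is why diversity and independence of judgments matter so much.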

However, it’s important to note that human swarm intelligence often works best when certain conditions are met, such as diversity of opinion, independence of individual judgments, decentralization of decision-making, and a mechanism for aggregating individual inputs. Unlike simpler biological or artificial swarms, humans also possess individual consciousness, complex language, and higher-order reasoning, which can both enhance and sometimes complicate pure swarm dynamics.
